OpenAI is the gateway platform to our industry’s next major revolution, leading to a massive increase in individual, team and company productivity.

If you’re trying to understand the impact of Large Language Models on developer productivity, you should definitely be following recent work by Simon Willison, creator of Datasette and a Django OG. He’s documenting his AI-assisted coding journey, and it’s fascinating.

He recently wrote a post – AI-enhanced development makes me more ambitious with my projects – which is canon.

> As an experienced developer, ChatGPT (and GitHub Copilot) save me an enormous amount of “figuring things out” time. For everything from writing a for loop in Bash to remembering how to make a cross-domain CORS request in JavaScript—I don’t need to even look things up any more, I can just prompt it and get the right answer 80% of the time.
>
> This doesn’t just make me more productive: it lowers my bar for when a project is worth investing time in at all.
>
> In the past I’ve had plenty of ideas for projects which I’ve ruled out because they would take a day—or days—of work to get to a point where they’re useful. I have enough other stuff to build already!
>
> But if ChatGPT can drop that down to an hour or less, those projects can suddenly become viable.

An hour or less. Viability. We have spent the last few years talking about code to cloud, but the next revolution is about idea to code. What happens when the time it takes to learn a new technology is no longer a constraint on building something new? Of course the software still needs debugging. The developer can’t expect perfect results. Hallucinations happen. Security, including prompt injection, is going to be an issue. But the power is there. It’s been unleashed.

When trying to understand the impact of a new technology or process on the industry, it’s always worth looking back at history to better model what’s actually happening now and what’s likely to happen in the future. So, to understand the likely impact of Large Language Models (LLMs) and AI on the practice of tech, I have been considering how we got here.

I have been thinking about the trend my colleague Stephen O’Grady described in his seminal work The New Kingmakers: the increasing influence of software developers and engineering teams on businesses, on decisions about the tech used to build apps and digital services, and indeed on creating and sustaining companies. The three megatrends underpinning the New Kingmakers phenomenon were Open Source, The Cloud, and Online Learning and Sharing. Now a fourth has arrived: AI-assisted code.

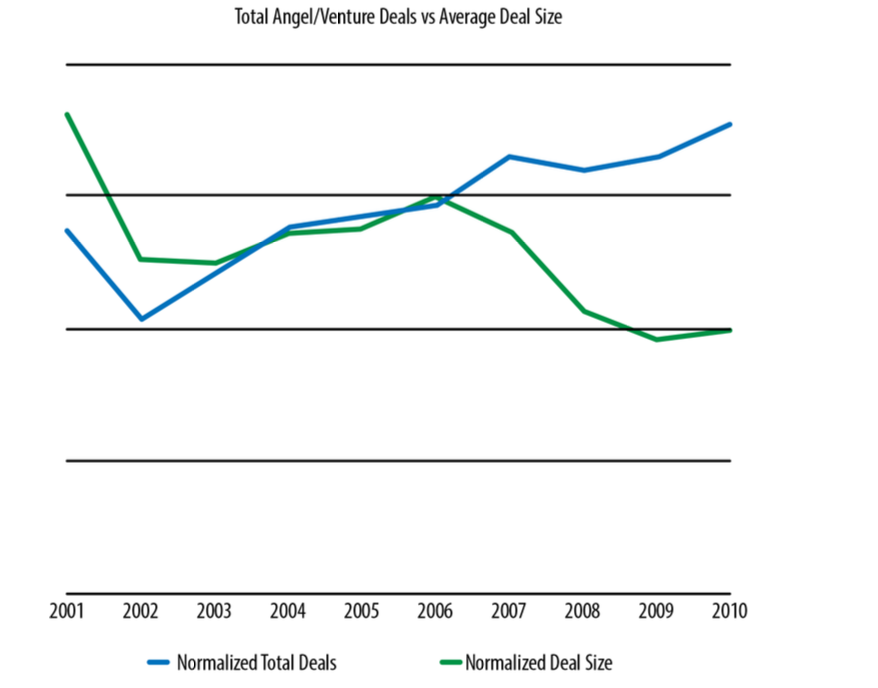

Some years ago we had a conversation with a guy called Chris Tacy which really struck us. Chris had been tracking investment deals in tech and he showed us this chart, which later found its way into Stephen’s book.

We’re looking at venture deals against average deal size from 2001 to 2010.

The classic package of people and technology required to build a startup is worth tracking, because it maps to average deal size. Leading into 2000, before the Dotcom Crash, big vendors were still in the game. Venture funding was spent on something like EMC for storage, Oracle for the database, Cisco for networking, BEA WebLogic for the application server, and 10 million dollars for marketing. So 50 million dollars to start scaling your startup. eBay relied on Oracle Database for the longest time. So did Salesforce. So you had to ask for permission, which is to say for a lot of money, persuading VCs to make a reasonably big bet on you.

Open Source was already in place and was making an impact. YouTube launched on MySQL in 2005. But the arrival of AWS in 2006 was a truly game-changing disruption.

VC as an industry was maturing, so until 2006 the number of deals was, of course, increasing. Finance was getting more interested in VC-led tech innovation. We were founding more startups. But in 2006 the chart shows a sudden divergence.

The cost of creating a startup goes down dramatically. Deal sizes crater. The number of startups being funded significantly increases. With AWS in play the cost of creating a startup was no longer what it was. We could try things in a way that we never could before.

The need to ask for permission to create new businesses was to an extent removed. There was also an industrialisation of the company creation process through accelerators. Y Combinator was a great model for creating lots of small trees. Lots of trees, some of which would bear flowers and then fruit.

So we got really excited as an industry. In fact, we over-rotated. A new breed of VCs, led by Andreessen Horowitz, leaned into the new opportunity. Seed funding became routine as people made their exits and started investing in others. We moved into the era of Software Eating The World, pouring money into more companies with smaller initial deal sizes.

Of course we then went big again, and deal sizes became outlandish, but the ability to write software, and create a new digital service, and scale it, with much less permission required? There was no going back.

So let’s look at where we are in 2023, why Simon Willison is a bellwether, and how Copilot and ChatGPT are changing his working methods. Simon is protective of his time. I’m not a big fan of “full stack engineer” as a term, but if anyone is full stack, it’s Simon. Of course he doesn’t know everything, but he knows how things fit together. He understands scaffolding, integration, and core engineering patterns. He always has a list of things in his mind he’d like to try.

But learning new things has an overhead, like the activation energy of a chemical reaction, and lowering that barrier takes a catalyst. Apparently that catalyst has now arrived. Here’s Simon describing one such project:

> My plan was to have my intercepting fetch() call POST the JSON data to my own Datasette Cloud instance, similar to how I record scraped Hacker News listings as described in this post about Datasette’s new write API.
>
> One big problem: this means that code running on the chat.openai.com domain needs to POST JSON to another server. And that means the other server needs to be serving CORS headers.
>
> Datasette Cloud doesn’t (yet) support CORS—and I wasn’t about to implement a new production feature there just so I could solve a problem for this prototype.
>
> What I really needed was some kind of CORS proxy… a URL running somewhere which forwards traffic on to Datasette Cloud but adds CORS headers to enable it to be called from elsewhere.
>
> This represents another potential blocking point: do I really want to implement an entire proxy web application just for this little project?
>
> Here’s my next ChatGPT prompt:
>
> “Write a web app in python Starlette which has CORS enabled—including for the auth header—and forwards all incoming requests to any path to another server host specified in an environment variable”
>
> I like Starlette and I know it has CORS support and is great for writing proxies. I was hopeful that GPT-4 had seen its documentation before the September 2021 cut-off date.
>
> ChatGPT wrote me some very solid code!
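For readers wondering what a CORS proxy like the one in Simon’s prompt actually involves, here is a minimal sketch. It is not the code ChatGPT wrote for Simon, and it swaps Starlette for Python’s standard library (`http.server` and `urllib.request`) so it runs with no dependencies. The `UPSTREAM_URL` environment variable, the helper names and the allow-any-origin policy are all hypothetical choices made for illustration. The proxy answers the browser’s preflight `OPTIONS` request itself, forwards other requests (including the `Authorization` header) to the upstream host, and adds CORS headers to whatever comes back.

```python
# Minimal CORS proxy sketch using only the standard library.
# Hypothetical names (not from the original post): UPSTREAM_URL, SKIP_HEADERS,
# cors_headers, upstream_url, CORSProxyHandler, run.
import os
import urllib.error
import urllib.request
from http.server import BaseHTTPRequestHandler, ThreadingHTTPServer

# Hop-by-hop headers, plus headers that urllib or http.server set themselves;
# none of these are copied between the browser and the upstream server.
SKIP_HEADERS = {
    "connection", "keep-alive", "proxy-authenticate", "proxy-authorization",
    "te", "trailer", "transfer-encoding", "upgrade",
    "host", "content-length", "date", "server",
}


def cors_headers() -> dict[str, str]:
    """CORS headers added to every proxied response and to preflights."""
    return {
        # Allow any origin: fine for a prototype, too loose for production.
        "Access-Control-Allow-Origin": "*",
        "Access-Control-Allow-Methods": "GET, POST, PUT, PATCH, DELETE, OPTIONS",
        # A "*" wildcard does not cover Authorization, so it is named explicitly.
        "Access-Control-Allow-Headers": "Authorization, Content-Type",
    }


def upstream_url(base: str, path: str) -> str:
    """Append the incoming path (including any query string) to the upstream base URL."""
    return base.rstrip("/") + path


class CORSProxyHandler(BaseHTTPRequestHandler):
    # Hypothetical environment variable naming the server to forward to.
    upstream = os.environ.get("UPSTREAM_URL", "http://localhost:8001")

    def _send(self, status: int, headers: list[tuple[str, str]], body: bytes) -> None:
        self.send_response(status)
        for name, value in headers:
            self.send_header(name, value)
        for name, value in cors_headers().items():
            self.send_header(name, value)
        self.send_header("Content-Length", str(len(body)))
        self.end_headers()
        self.wfile.write(body)

    def do_OPTIONS(self) -> None:
        # Answer the CORS preflight directly, without contacting upstream.
        self._send(200, [], b"")

    def _forward(self) -> None:
        length = int(self.headers.get("Content-Length", 0))
        body = self.rfile.read(length) if length else None
        headers = {k: v for k, v in self.headers.items() if k.lower() not in SKIP_HEADERS}
        request = urllib.request.Request(
            upstream_url(self.upstream, self.path),
            data=body, headers=headers, method=self.command,
        )
        try:
            with urllib.request.urlopen(request) as resp:
                status, resp_headers, data = resp.status, resp.getheaders(), resp.read()
        except urllib.error.HTTPError as err:
            # Pass upstream error statuses (401, 404, ...) through to the browser.
            status, resp_headers, data = err.code, err.headers.items(), err.read()
        except urllib.error.URLError as err:
            # Upstream unreachable: report a gateway error instead of crashing.
            status, resp_headers, data = 502, [], str(err.reason).encode()
        # Drop skipped headers and any upstream CORS headers (we add our own).
        kept = [
            (k, v) for k, v in resp_headers
            if k.lower() not in SKIP_HEADERS and not k.lower().startswith("access-control-")
        ]
        self._send(status, kept, data)

    # These methods are all forwarded unchanged to the upstream server.
    do_GET = do_POST = do_PUT = do_PATCH = do_DELETE = _forward


def run(port: int = 8000) -> None:
    """Serve the proxy on the given port until interrupted."""
    ThreadingHTTPServer(("", port), CORSProxyHandler).serve_forever()
```

Calling `run()` with `UPSTREAM_URL` set starts the proxy on port 8000. A Starlette version would typically lean on its `CORSMiddleware` plus an HTTP client such as httpx; the standard-library version just makes the moving parts visible.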

Which is to say, we’re now in a completely new environment, with a new toolset. Think about the previous era of the New Kingmakers: AWS, Open Source and GitHub all came together to help people learn and build. We’re at that point again.

In a recent post, “The future just happened: Developer Experience and AI are now inextricably linked”, I described the huge change in developer experience driven by the new tools:

> 2022 everything changed. We woke up and developer experience was mediated by AI. It turns out that AI isn’t going mainstream because enough developers know how to program using it, but rather it’s becoming evenly distributed because it’s fundamental to how developers are going to work going forward. GitHub Copilot went generally available in June 2022. OpenAI launched ChatGPT in November 2022. We’ve had the decade of software eating the world. Now it’s AI’s turn.

While some folks worry that developers will be replaced by AIs, I tend to think we’ll instead see an acceleration. Spreadsheets didn’t get rid of the need for calculations in business. The golden era for developers isn’t over. AI makes it easier than ever to learn new skillsets.

Developers are going to be freed up to do incredible work in a way they never have before. Another permission blocker – their own time to learn – is being removed. If they want to do something they can build it.

If you have an idea, you can create a tool. If you have an idea for an application, you can build that application, because the price of making new working applications has cratered.

The costs associated with doing a new thing, creating a new thing, developing a new app are increasingly eliminated.

So what about infrastructure? You said OpenAI is the new AWS, right?

OK, just to be clear: in this post I am arguing that OpenAI, and LLMs and Generative AI more broadly, are the next big revolution to make developers more productive. It’s not that ChatGPT and associated tools compete directly with AWS. Yet.

Thinking about the Tacy chart above, I expect to see changes in how projects and companies are founded and scaled. We’re going to see more startups, probably with smaller teams, doing more impactful work. Then again, I have also argued that serverless could lead to the first billion-dollar single-person startup, for reasons very similar to those in this post. AI could be what gets us there.

So about infrastructure. There is absolutely no doubt that LLMs and ChatGPT are going to reinforce the moat that Microsoft has established with its ownership of GitHub and Visual Studio Code. Microsoft owns where developers work. With the addition of AI things get even more interesting. Because while developers love those properties, the same can’t be said of Microsoft Azure. And yet… the epic opportunity for Microsoft is to make Azure a fait accompli. Which is happening now with OpenAI. Everyone using ChatGPT is using services running on Azure.

So what about a toolchain that eliminates Azure as a gating factor for developers building apps in GitHub and VS Code? Which is to say, Microsoft has the opportunity to create a once and future developer experience that finally and properly brings the pain to AWS. No other vendor has remotely the developer assets to pull this off. GitHub was the ultimate bargain for what comes next.

Stephen has written a lot about primitives and opinions, and recently asked whether a cloud provider could offer a PaaS-like experience that is more flexible and less opinionated, offering the best of both IaaS and PaaS, mediated by large language models and generative techniques for infrastructure deployment and management. Azure’s security issues notwithstanding, yes, Microsoft could benefit significantly.

AWS, unusually, finds itself on the back foot. Now it wants to lead a coalition of the models. Just today AWS published “Announcing New Tools for Building with Generative AI on AWS”, with a focus on Foundation Models (FMs) [that don’t come from OpenAI]. Multi-model is the new multi-cloud.

> The potential of FMs is incredibly exciting. But, we are still in the very early days. While ChatGPT has been the first broad generative AI experience to catch customers’ attention, most folks studying generative AI have quickly come to realize that several companies have been working on FMs for years, and there are several different FMs available—each with unique strengths and characteristics. As we’ve seen over the years with fast-moving technologies, and in the evolution of ML, things change rapidly. We expect new architectures to arise in the future, and this diversity of FMs will set off a wave of innovation. We’re already seeing new application experiences never seen before. AWS customers have asked us how they can quickly take advantage of what is out there today (and what is likely coming tomorrow) and quickly begin using FMs and generative AI within their businesses and organizations to drive new levels of productivity and transform their offerings.

It will be interesting to see what developers make of AWS’s new services in this arena. Its code assistant, CodeWhisperer, also went GA today, alongside new dedicated instances for machine learning.

In the final analysis then, we stand at quite the industry crossroads. Developers remain the new kingmakers, and good ones will be more productive than ever. I expect the new era to be industrialised in terms of creating new products, projects and companies. Incumbents won’t stand still, but in late 2022 everything changed, and now it’s about watching and building, as the change shakes out. And right now endlessly fascinating new AI companies, products, and services are indeed being created on a seemingly hourly basis.

Disclosure: AWS, GitHub and Microsoft are all clients. This post was not sponsored.

Doug K says:

April 13, 2023 at 10:16 pm

this is the single aspect of LLMs that seems to me truly innovative.

The LLMs so far have shown themselves utterly unreliable and generators of falsity. This is great for ad copy but I struggle to see any other field of work which could use the outputs. Brandolini’s Law ensures the efforts of correcting the LLM output will be more expensive than doing the job right in the first place.

But with coding they appear able to reliably produce nearly functional code. It’s interesting philosophically to wonder how this happens.

Ludovic Leforestier says:

April 18, 2023 at 3:36 pm

Really interesting take James. In his book, Shoe Dog, Nike’s founder Phil Knight writes about his difficult relationship with banks as the lack of cash flow hampered his company’s development.

This is less an obstacle now, technology is also easy to get and deploy.

We could indeed see many more flowers, and hopefully more fruits?

James Governor says:

April 26, 2023 at 6:18 pm

great point Ludovic.