One year ago next week, GitHub announced the technical preview of Copilot, which it described as an “AI pair programmer that helps you write better code.” It was an AI model trained on a very large dataset of code, and interest in the technology was high. As was, in some corners, the level of concern about issues ranging from copyright and licensing to security.

A year later, and GitHub has now announced that the product is out of technical preview and generally available.

finally. this is good news. https://t.co/huH8KwvY8e

— Oslo Filet (@monkchips) June 21, 2022

Over the last twelve months, we’ve fielded many questions about Copilot. It only seemed fitting, therefore, to return to the Q&A format to look at where the product is today and what it suggests about where it – and the issues around it – are headed moving forward.

Q: Let’s start with the basics: what is Copilot?

A: On a basic level, you can think of it like autocomplete / intellisense. Developers have had these capabilities for decades. While one is writing code – most often in R in RStudio, in my case – the IDE is watching the typed output in real time and trying to guess at the intent. In some cases it’s just to save the effort of typing it, or in others it’s because the user might not remember the exact syntax. It’s based on the same principles, to some degree, as the autocorrect on your phone as you type a text message.
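To make the contrast concrete, here is a minimal sketch of the traditional kind of completion: a plain prefix lookup against a list of known names. The symbol list and the function name `complete` are hypothetical, chosen only for illustration; real IDEs are considerably more sophisticated than this.

```python
from bisect import bisect_left


def complete(prefix, known_names):
    """Return every known name that starts with the typed prefix."""
    names = sorted(known_names)
    # Binary search for the first name that could match the prefix.
    start = bisect_left(names, prefix)
    matches = []
    for name in names[start:]:
        if not name.startswith(prefix):
            break  # names are sorted, so no later name can match
        matches.append(name)
    return matches


# Hypothetical symbol table; not taken from any real library.
SYMBOLS = ["read_csv", "read_table", "readline", "render", "reduce"]

print(complete("read", SYMBOLS))  # ['read_csv', 'read_table', 'readline']
```

Nothing here knows what the surrounding code is trying to do; it only matches characters against names it has already seen.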

Where Copilot differs and goes further, however, is that it’s not blindly doing a trivial lookup to determine whether or not what you’re typing matches a specific function name, it’s attempting to understand the entirety of the code and the intent behind it. This is a major leap from just code completion; in certain cases, depending on what you’re doing and how novel the task is, it can and will write the code for you. And not just code, but comments:

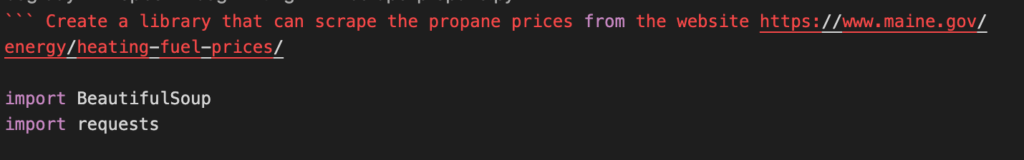

Copilot, for example, wrote this comment for me based on three or four words and a link:

Q: Is this an entirely new idea? Has this never been done before?

A: Short answer is no. In terms of how it extends the autocomplete concept into code generation, it’s comparable to Tabnine or Kite. The former just took more money, but the status of the latter is unclear at present: their last round was three years ago, they discontinued their individual project and their social media has gone dark.

So yes, we’ve seen this idea before. It’s the first time, however, that we’ve seen an entrance into this market made by a company of this scale and with this level of resources – be that financial backing, adjacent relevant assets or a large corpus of data ready to hand.

Q: Why did it take a year to GA?

A: Only GitHub can answer that, but the reality is that this is a very complicated service to roll out. Balancing pricing between what’s acceptable and what your costs are for a non-trivial amount of compute is tricky. So you have questions of price sensitivity, operational costs per user and how to appropriately package all of the above – not to mention the legality of it all, which presumably we’ll come back to – which take time to resolve.

Q: Who is it for?

A: This seems like a basic question with a basic answer: developers. But the reality is more complicated because you have to answer the question of: which developers? And to answer that question, you have to know what it’s actually for.

Q: What it’s for? Isn’t that obvious: helping developers to write code?

A: Code, yes, but which code? For example, if you’re a developer and want to use Copilot to help you write applications on the side, for your personal usage, that’s a decision without much if any risk. But if I’m an enterprise developer – or their boss – further consideration is probably in order. Three considerations that tend to be top of mind are:

- Quality: First, you’d want to know if you can trust the code Copilot generates: is it good quality, and therefore saving you time? Or is it more prone to spitting out gibberish, like you might see from some low code platforms? Will it actually save time?

- Security: You’d also want to know if you can trust it from a security standpoint. One study has 40% of the generated code introducing vulnerabilities.

- Legality: And perhaps most critically, what is the legality of the output?

There are other considerations, such as what areas of the codebase you’d expect Copilot to contribute the most to – just boilerplate? Or could Copilot genuinely be of assistance in writing novel applications without a great deal of precedent. But those are really at the margins. Copilot’s relevance is likely to depend on higher order factors.

Q: And what are the assessments of all of the above?

A: To some extent, TBD as we wait and see what the reactions are at scale. And it will obviously vary from user to user. But as for quality, while that’s challenging to assess objectively, the overwhelming sentiment to date has leaned towards being impressed – often extraordinarily so. The most common reaction, in fact, is amazement. Multiple tweets, for example, have referenced Clarke’s quote that any sufficiently advanced technology is indistinguishable from magic. And it’s worth noting that some of the developers who are most impressed by Copilot are both good and senior. For example:

I might be done with work today because Copilot successfully generated half of the boilerplate I was planning to work on.

— Jaana Dogan ヤナ ドガン (@rakyll) June 10, 2022

To be fair, Jaana works at GitHub now, but she held similar sentiments prior. So while the jury is out, quality at this point does not appear to be a major issue.

Q: What about security?

A: Again, we’ll need more evidence to make a firm call one way or another, but I don’t see any objections thus far as being particularly damning. Forty percent of code sounds like a lot until you consider the fact that most companies won’t have a quantitative human baseline to compare against. It’s entirely possible that 40% is an improvement in many situations; certainly there is no shortage of enterprise vulnerabilities today in a market in which Copilot is just getting off the ground. And enterprises are already consuming large volumes of external software, both open source and otherwise, that is of uncertain quality from a security standpoint – which is why security investments are skyrocketing.

Throw in the fact that, as GitHub ramps up this technology, its ability to scan for vulnerabilities in real time will presumably improve, and overall I don’t view security as a showstopper for Copilot either.

Q: Ok then, what about the legality of all of this?

A: Well, there are multiple potential dimensions of concern here.

- First, is GitHub potentially liable for using, as one example, GPL-licensed code as a training input and outputting non-GPL licensed code?

- Second, would a user of said non-GPL licensed code be potentially liable for using it?

- Lastly, the actual legality aside, what are the wider “spirit of the law” implications to what’s being done here? I.e. is there a Dr. Ian Malcolm-style “your [engineers] were so preoccupied with whether they could, they didn’t stop to think if they should” question to be asked here?

Q: Let’s start with the basics: is GitHub liable?

A: GitHub’s belief – as evidenced by the fact that they both introduced this product and brought it to general availability, not to mention the briefings we’ve had on the project – is that because it is machines and algorithms at work here, not humans, the copyright and licensing issues are non-applicable. I am in no way qualified to have an opinion on that legal analysis, but it’s worth noting that this happens to be the consensus of the IP lawyers I know and trust on these subjects. For an example of that, go listen to Luis Villa’s take on this podcast.

So the short answer is that as far as GitHub’s concerned, the original licenses of the input codes are not applicable to the output which should, according to the company, in most cases be an entirely original composition merely informed by the code it was trained on.

Q: Does that mean that per the second question above, the user doesn’t have to worry about it either?

A: That’s clearly GitHub’s contention. They don’t address that explicitly, but on the Copilot page it is directly implied. They say:

GitHub Copilot is a tool, like a compiler or a pen. GitHub does not own the suggestions GitHub Copilot generates. The code you write with GitHub Copilot’s help belongs to you, and you are responsible for it. (emphasis mine)

In that line GitHub is asserting two things. They’re saying explicitly that the output is not under some new GitHub license, it’s as if you wrote it yourself unassisted. And in doing so they are saying implicitly that the license of the code that was used to train the system is immaterial. Because if the original licenses followed this generated code, that code would not, in the case of copyleft licenses at least, belong to you.

There are some questions at the margins – in the tiny percentage of cases where it spits something out verbatim, what happens? – but overall, pending new case law, the consensus of people actually qualified to have opinions on this, i.e. not people like myself, seems to be that this is legal for both GitHub and users.

Q: Ok, let’s assume this follows and obeys the letter of the law. What about the spirit of the law?

A: There are basically two ways to think about that, in my view. The idealist says this is wrong and we need filters or other mechanisms to prevent this type of moral, if not legal, violation. The pragmatist says if the optimists about the technology are right and this or technologies like it end up becoming ubiquitous and are provably and defensibly legal, the spirit of the law won’t matter much. Unless new IP mechanisms, licensing or otherwise, are introduced to explicitly attack this kind of usage, at least.

There are certainly many in the idealist camp. Even advocates of permissively licensed projects – who would be losing, theoretically, attribution or similar – have reacted with dismay at the perceived injustice of Copilot’s transformation or lack thereof of projects carrying licenses like the BSD 3 Clause or the MIT.

Copyleft license advocates, by contrast, stand to lose even more, because their expectation upon placing such a license on their project – presuming they did it deliberately and with intention rather than merely following the lead of popular projects like Linux or MySQL – was that the reciprocal requirements those licenses impose would follow that code in perpetuity. Instead, the GPL code is merely input for an output that carries no such license and no such requirements. And indeed, the FSF called for papers exploring just this subject. One of those, however, came to the conclusion that the activity here is, in fact, legal. As an aside, it’s also worth asking whether that organization specifically will command the sympathy of the markets here as it once might have, given some of the callous personnel decisions it has made in recent years.

But ultimately, the question here isn’t whether there are moral issues at stake: there are. The question is whether they will matter from a practical standpoint.

The answer likely depends on whether the works Copilot produces are truly original. GitHub says the following on that:

The vast majority of the code that GitHub Copilot suggests has never been seen before. Our latest internal research shows that about 1% of the time, a suggestion may contain some code snippets longer than ~150 characters that matches the training set.

If GitHub gets the share of original suggestions to 100%, and everything that comes out of it is entirely original – or what they call the “common, perhaps even universal, solution to the problem” – the objections here don’t go away but are more difficult to sustain.
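GitHub does not describe how it measures overlap with the training set, so the following is only a rough sketch of what a check like the one behind its 1% figure might look like. It assumes an exact, character-for-character match with no normalization of whitespace or identifiers. The function name, the in-memory list of training documents and the sample strings are all hypothetical.

```python
def has_verbatim_overlap(suggestion, training_docs, min_chars=150):
    """True if any run of `min_chars` characters in the suggestion
    appears verbatim in at least one training document."""
    if len(suggestion) < min_chars:
        return False
    # Any match of min_chars or more must contain a window of exactly
    # min_chars, so checking fixed-size windows is sufficient.
    for start in range(len(suggestion) - min_chars + 1):
        window = suggestion[start:start + min_chars]
        if any(window in doc for doc in training_docs):
            return True
    return False


# Hypothetical stand-in for a training corpus (320 characters).
corpus = ["def add(a, b):\n    return a + b\n" * 10]

copied = "# helper\n" + corpus[0][:200]   # reuses 200 characters verbatim
original = "print('hello')\n" * 20        # shares nothing with the corpus

print(has_verbatim_overlap(copied, corpus))    # True
print(has_verbatim_overlap(original, corpus))  # False
```

A naive scan like this would be far too slow against a corpus the size of GitHub; it is here only to show what “matches the training set” means in practice.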

There are, however, multiple independent claims that Copilot is potentially reproducing code from non-open source repos. If that’s the case, GitHub likely has a problem. If those are one offs, or if GitHub can correct that behavior, however – and possibly even if they’re not and it can’t – the evidence suggests that it probably won’t matter.

Consider that for the better part of a decade, the open source licensing trend has been towards permissive licenses that impose fewer requirements and restrictions upon users of the licensed assets. Consider that the majority of projects hosted on GitHub historically have carried no license at all. And consider that as open source has been repeatedly attacked by those who would seek to undermine its stability with licenses which masquerade as open source and erode bedrock principles, the collective reaction from the market has been, effectively, a shrug.

The inescapable conclusion – at least pending some unexpected explosion of outrage – is that the average developer has essentially come to take open source for granted. As such, it seems unlikely that open source communities will be able to generate outrage sufficient to materially change the course of what even critics reluctantly admit is a legal enterprise.

Is it possible that there’s pushback sufficient to compel GitHub to introduce filters or other mechanisms to prevent certain projects from being used to train Copilot? It’s possible. But certainly not probable. That being said, I think GitHub is introducing risk here to Microsoft by proxy, because the latter has laboriously been rehabbing its open source image for over a decade at this point and this, for many developers, is a black eye to those efforts. Notably, the most bitter criticism I see about Copilot tends to blame Microsoft, not GitHub.

Q: Let’s go back to the question of who and what this is for? The assertion was made that to know who this was for, you’d have to know what it was for, and that came down to some basic assessments from quality to legal and so on. The analysis above aside, what’s the assessment of what the market’s view on Copilot is right now?

A: The reactions to Copilot have been very interesting, and suggestive of a potential path. Most every developer we have spoken with, as described above, has come away impressed, even if reluctantly. That has been my impression as well, just in my personal usage.

It’s been impressive enough, in fact, that there are those asking the question as to whether the AI will eventually take jobs away from developers.

Ignoring Copilot’s intellectual property issues, are people worried that the code they potentially labored over for hours with dubious success can be replicated in seconds? Are some devs feeling the same fear that store cashiers have been feeling for over a decade now?

— Bryan Liles (@bryanl) July 5, 2021

That’s not a fear I happen to share, at least for the foreseeable future, but it speaks to the degree to which the technology has elicited some extraordinary reactions from some otherwise hard to impress audiences.

And yet I don’t talk to a lot of developers using Copilot, in earnest, in and on production workloads. Which makes me wonder if Copilot is going to go down an atypical path here.

Q: How do you mean?

A: There are some transformative technologies that land and are obviously useful immediately: cloud comes to mind. As primitive as EC2 was when it launched, for example, there wasn’t much question – and quickly – whether it was useful. There are other technologies, however, that require market acclimation.

I tell the story all the time, for example, of some of my early days as a consultant implementing CRM systems. One of the things a company I worked for pushed with a few clients was a hosted CRM system. Roughly half of onsite implementations at that time failed for one reason or another, so the argument was made to customers that you should let a company that implements these systems for a living set it up offsite and run it for you.

Every single time the reaction was horror: “the customer data is the most valuable data we have – it will exit the firewall over my dead body” was the prevailing sentiment. Within six years, however, every single one of these customers was on Salesforce.com.

The problem, of course, wasn’t the technology. It was that people weren’t ready for the technology, they weren’t acclimated to it. But if the value is evident, and they’re given time, companies will eventually adjust.

Developers likely will too.

Q: You think Copilot is ready to take off, then?

A: That depends on what timeframe we’re talking about, because it seems very probable that some more acclimation is going to be required. Roy Amara said once that “We tend to overestimate the effect of a technology in the short run and underestimate the effect in the long run,” and I think that’s the case here.

I think the incredible nature of some of the initial Copilot developer experiences obscures the fact that developers will have to learn to trust it, just as they would a human developer. Even amongst developers that have no moral qualms about the service, you see comments like “it’s interesting, but I’m not paying them what I pay Netflix.” Which is a perfectly cromulent decision if you don’t trust the product at all. If you do trust the product, however, that’s nonsense: $10 is a relative pittance for a pair programmer that’s always available. It seems probable, then, that some of the initial hype will give way to more meager returns than would otherwise be expected for something so potentially transformative on an individual basis.

Over the longer term, however, I think Copilot – and its current and future competitors – are likely to be a staple of the developer experience.

Q: Why is that?

A: For those that ever leveraged Ruby on Rails when it was introduced, or virtually any other framework, think about that initial experience of getting set up. In the case of Rails, a few simple commands and all of the scaffolding of a basic website was built for you. Hours of tedium were abstracted, and even if it was done imperfectly in Rails or other frameworks, not having to start from scratch was an enormous win. As we have seen repeatedly with popular frameworks leading to surges in programming language traction, most recently with Flutter and Dart.

Copilot, Tabnine and others offer a similar ability to, at a minimum, avoid wasting time on scaffolding, boilerplate and the like. Which saves that most precious of commodities: developer time. That’s important and relevant individually, but for organizations desperate to improve their development velocity – which is effectively all of them at present – it’s potentially even more attractive. If enterprises could spend $10 a month and save their developers even a single hour, that’s an easy trade.

If, that is, the market can acclimate. Which I think it will.
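The “$10 for an hour saved” trade can be put in numbers with a back-of-the-envelope sketch. The $10 monthly price comes from the discussion above; the $60 fully loaded hourly cost of a developer is a purely hypothetical figure, as are the function names.

```python
def breakeven_minutes(monthly_price, hourly_cost):
    """Minutes of saved developer time per month at which the
    subscription pays for itself."""
    return monthly_price * 60 / hourly_cost


def monthly_net_value(monthly_price, hourly_cost, hours_saved):
    """Value of the time saved in a month minus the subscription price."""
    return hours_saved * hourly_cost - monthly_price


PRICE = 10        # dollars per month, as cited in the text
HOURLY_COST = 60  # dollars per hour; hypothetical assumption

print(breakeven_minutes(PRICE, HOURLY_COST))     # 10.0
print(monthly_net_value(PRICE, HOURLY_COST, 1))  # 50
```

Under those assumptions the subscription pays for itself after ten minutes of saved time a month, and a single saved hour nets $50. That only holds, of course, if the time is actually saved.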

Q: What’s the basis for that expectation? Simple value of time?

A: I think of it this way. In professional and in some instances amateur sports, there are recurring problems with PEDs. Highly competitive athletes looking for a competitive edge is one thing, and a not very surprising thing at that. What’s more notable is that some of those that use PEDs later confess that they didn’t necessarily want to and had concerns, but felt compelled to because an increasing number of their peers were doing so and they didn’t want to get left behind.

If the core hypothesis is correct that Copilot and products like it will save individual developers or teams of developers time – and it might not be, but the available evidence suggests that it is, or will be in time – developers might not ultimately have a choice.

If there are two otherwise comparable teams going in the same direction, and one of them is using an AI to enhance their performance and having it write any appreciable amount of code – even basic boilerplate – then the team using Copilot is likely to iterate faster. And in the end, that’s probably enough to guarantee this category life and relevance well into the future.

Q: So that’s about the category generally: what about Copilot specifically?

A: Well, as mentioned they are inarguably the best resourced product in market at present, and that is unlikely to change given GitHub’s inherent advantages in access to data, Microsoft’s deep pockets and so on. But it also is about more than that: it’s about the developer experience that Microsoft will be able to deliver both now and in future.

Q: Where does the developer experience tie in to all of this?

A: About twenty months ago I started talking about what I refer to as the developer experience gap. In the interim, attention to the developer experience has exploded because it’s an area both that developers have strong incentives to care about and that their employers are fixated on because they want their developers to be able to go faster.

The gist of the thesis in that piece was that the market had produced an overabundance of developer tools, but that the experience of having to evaluate and sift through them all, let alone integrating your choices into a toolchain that needed to be maintained, protected and operated was problematic.

Now consider what the combination of GitHub and Microsoft is able to deliver, at least on paper – the aforementioned “adjacent assets”: a well liked version control system, native and functionally improving CI/CD and issue tracking systems, security scanning, cloud development environments and, as of this week, a service that has the potential to write code for your developers so that they don’t have to. Oh, and they have the most popular IDE on the market in VS Code and, some recent missteps notwithstanding, a credible cloud platform.

That’s a lot of what developers need, and it’s all first party. Microsoft and GitHub aren’t there yet as far as tying it all together into a neat little seamless bundle, but the opportunity there is obvious.

Q: Last question: what does Copilot mean for open source?

A: We’re already over 3700 words; answering that might take another 3700. Let’s leave that for another time.

Disclosure: GitHub, Microsoft and Salesforce are RedMonk customers. Kite and Tabnine are not currently RedMonk customers.
