Several times this year we’ve heard messages of social responsibility directed towards developers, issued from the stages of conference keynotes.

“The opportunity for us as developers to have broad, deep impact on all parts of society and all parts of the economy has never been greater. With this enormous opportunity, I believe, comes enormous responsibility.”

- Satya Nadella (Microsoft Build 2017)

“We think this is the best time in history to be a developer. Our craft is respected, our work has been elevated, and we’re in an era of rising demand. This is our time. … As much as it is our time, the stakes are high. We have responsibilities to our craft, to each other, and to the world that developers never had before.”

- Sam Ramji (Google Next 2017)

As we become increasingly reliant on technology across increasingly broad swaths of society, the people that create these technologies play increasingly impactful roles. Vendors themselves carry responsibility for producing secure, well-designed products. However, the tools themselves account for only a portion of the societal impact. The developers and operators that write, maintain, and implement code using these tools share in the obligation to build thoughtful, responsible systems. It is notable to watch vendors share this biblical/Churchillian/Spider-Man philosophy with their developer communities.

Ramji defined responsible action as creating systems that are secure, flexible, and open. Nadella asked developers to use design principles to enshrine the timeless values of:

1) empowering people with technology,

2) using inclusive design to help more people use technology and participate economically, and

3) building trust in technology by taking accountability for the algorithms and experiences they create.

Nadella explicitly referenced 1984 and Brave New World, and he called upon developers to ensure these dystopian societies do not become a reality. He asked developers to consider the impact of their decisions in relation to possible unintended consequences of technology. Though Nadella referred to himself as a ‘tech optimist,’ it was fairly stark messaging to begin the conference.

While Microsoft directed more attention to the moral and ethical imperatives facing developers than we typically see from a keynote, the subsequent material highlighted just how difficult it is to be cognizant of inclusivity and unintended consequences in product design.

Microsoft’s most obvious showcase of empowering people with technology was the heartwarming story of Haiyan Zhang and Emma Lawton. Zhang built a wearable device dubbed the Emma to combat tremors associated with Parkinson’s disease. It’s hard to see anything but upside in using technology to help people regain their physical abilities, and this was an excellent example of technological empowerment.

So proud to be onstage today with @ems_lawton and @satyanadella at #MSBuild – showing our work together to combat Parkinson's #Build2017 https://t.co/K6ysGUVPjs

— haiyan zhang (@haiyan) May 10, 2017

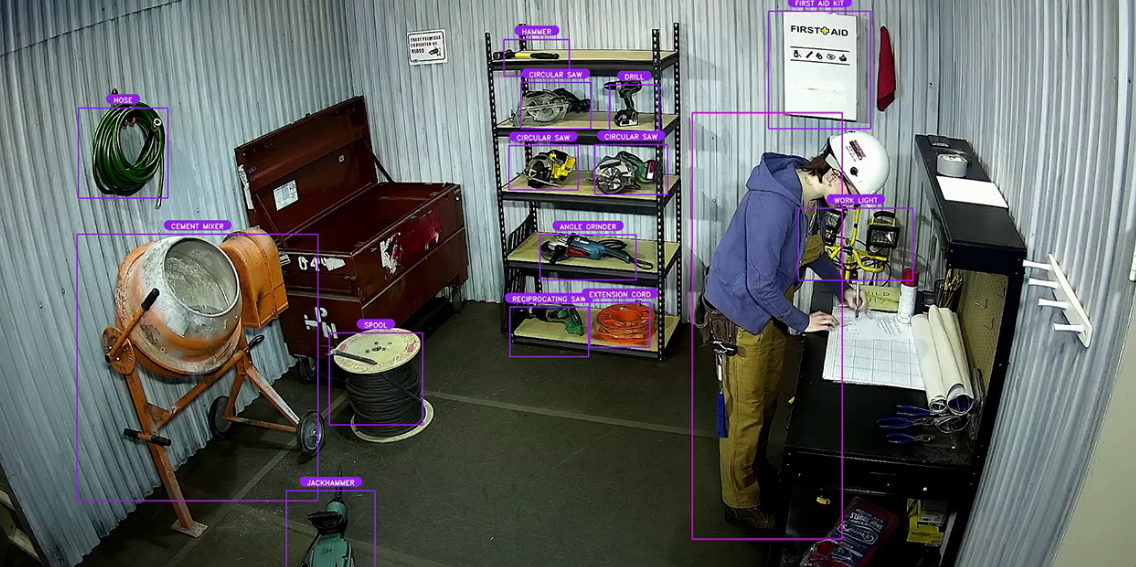

On the other hand, the job site safety demo caught my attention as an example of potential unintended consequences. In this demo, cameras were connected to an AI system, which in turn sent text messages to notify construction workers of potential safety hazards. Using technology to create safer workplaces is a laudable goal, and I think I would have seen only upside to it had my brother’s experiences not primed me to look at this demo through the lens of inclusivity.

My brother currently manages a warehouse. The team has grown substantially and they are now designing new processes to help scale their communication. These systems are little to speak of technologically; mostly they are considering using more written notices and text messaging. However, even these seemingly minor changes create a degree of turmoil for some people in the warehouse.

While all the members of the team have demonstrated their ability to succeed in their jobs as they currently exist, it is less clear that all the team members will be able to transition to a new system that relies more heavily on reading and writing. In our industry, where virtually all people read and write for a living, communicating by text is an easy skill to take for granted. However, the most recent U.S. Department of Education study found that 12% of adults in the United States have “below basic” skills in document literacy; if we extrapolate this rate to today’s population, it implies that there are roughly 30 million adults with limited ability to read and comprehend basic written instructions. A handful of these 30 million people work for my brother, and it is his great challenge to build a system that supports both a growing warehouse and his workers.

This is admittedly an anecdote, but it’s also a concrete example of how seemingly minor changes impact people in low-skilled jobs. If we watch people struggle to make the transition from an exclusively manual labor job to a manual labor job with moderate literacy requirements, what will it mean for these workers as we begin introducing even more extensive technology? Perhaps more advanced technology will offer more assistive opportunities to my brother’s warehouse, either through translation services or text-to-speech software. Perhaps it will make these workers’ skills obsolete even more quickly. Unintended consequences are tricky like that.

This is not an argument to slow or halt technological progress. My colleague Steve recently posted thoughts in his newsletter about how the world does not owe you a living. I agree with that sentiment. We do not want a situation like Brave New World, in which the Controller argues, “we have our stability to think of. We don’t want to change. Every change is a menace to stability. That’s another reason why we’re so chary of applying new inventions.” That is not the goal.

We do, however, want a world where developers are cognizant of their impacts. We need developers to analyze their products and think about second-order effects from a variety of perspectives as part of the software development process. We want to encourage technological progress, and we want to do right by people. This is one minor example that illustrates the difficulties of anticipating unintended consequences and considering who is empowered or disempowered by technology products. With one in four workers expecting their job to be eliminated via automation in the next decade, this small example is indicative of a much broader economic shift underway.

It is difficult to balance the benefits of technological advances with the complicated ethics of doing societal good. I appreciate both Microsoft and Google for bringing attention to the cause of developer ethics. More dialogue is needed in this area. I encourage more voices – both vendor and developer – to join the conversation about how to build ethically sound, inclusive technology.

Disclaimer: Microsoft and Google are RedMonk clients and they covered T&E to their respective conferences.

Tracy says:

May 18, 2017 at 9:53 am

Reading the quotes, I can’t help but be reminded of Silicon Valley’s Gavin Belson: What is Hooli? Excellent question. Hooli isn’t just another high tech company. Hooli isn’t just about software. Hooli…Hooli is about people. Hooli is about innovative technology that makes a difference, transforming the world as we know it. Making the world a better place, through minimal message oriented transport layers. I firmly believe we can only achieve greatness if first we achieve goodness.

But seriously, great topic & whole-heartedly agree we do need more dialogue around it. So thanks for this and the interesting insights into the warehouse & impact of tech on manual labour jobs.

Also agree it is something we want developers to be much more aware of and take responsibility for, the onus is on us. It’s not something we can leave to the corporations. The best talk I have seen on this area is ‘Will the New Industrial Revolution Lead to a Controlled Society or to a Creative Society?’ by Hans-Jürgen Kugler. As relevant today as it was when delivered (if not more so). https://www.youtube.com/watch?v=qBVMvM0oXrk

James Campbell says:

May 21, 2017 at 7:02 am

While better than seizing power without acknowledging responsibility, simply acknowledging responsibility does not eliminate the need to take a more inclusive approach to wielding power in the first place. Rather than suggesting that developers ought to be more thoughtful and careful in the design and selection of products, I’d like to see more explicit care taken in ensuring that there are checks on any one class or group (developers, large tech companies) wielding power.

To me it seems that the clear lesson of the ‘great power => great responsibility’ dynamic is that it is not an individually and sustainably achievable balance. ‘Power corrupts; absolute power corrupts absolutely:’ when lots of humans are involved, the safer approach is to distribute power, not hope for a benevolent (class) leader.

Still, with that noted, it is great to hear the articulation of potential design criteria for the products and services they’re building, and I hope more dialogue about those criteria–and especially how to evaluate and encourage them–will indeed promote a brighter future.