Winston Bumpus of the DMTF joins us to give us an update on what the standards body has been doing in IT Management and cloud computing.

Download the episode directly right here, subscribe to the feed in iTunes or other podcatcher to have episodes downloaded automatically, or just click play below to listen to it right here:

Show Notes

- Winston and I did a video several years ago.

- Update on DMTF standards, like SMASH, OVF.

- What’s the DMTF’s cloud standards involvement at the moment? Interoperability, defining things, security – APIs, packaging.

- John asks about things that seem missing for cloud management and such.

- We speak about CIM, the models of IT stuff from the DMTF.

- Whatever happened to AMS (Application Management Specification)? dev/ops people are bumping up against this a lot.

- We talk about the possible pull to have developers more involved in infrastructure that cloud and dev/ops brings.

- Winston tells us about US government work in cloud that the DMTF has been helping with.

- John hits up more on OVF.

Transcript

As usual with these un-sponsored episodes, I haven’t spent time to clean up the transcript. If you see us saying something crazy, check the original audio first. There are time-codes where there were transcription problems.

Michael Coté: I just noticed that when Winston introduces himself, the audio very conveniently cuts out. So when you hear the introduction of the guest in this episode and there’s no sound, just insert in your mind the name Winston Bumpus who, as he says, is the President of the DMTF, and we’re really grateful to have him on this episode. It was a fun discussion. So with that enjoy the episode.

Well, hello everybody. It’s the 9th of July 2010, and this is the IT Management and Cloud Podcast, Episode Number 76. As we mentioned last episode, we have a guest on with us this week, but first, as always, I’m one of your co-hosts, Michael Coté, available at peopleoverprocess.com. I’m joined by the other co-host as always.

John Willis: John M. Willis from Opscode, mostly at john@opscode.com.

Michael Coté: And then would you like to introduce yourself guest?

Winston Bumpus: Yeah, this is Winston Bumpus, President of the DMTF, The Distributed Management Task Force, and my other full time job is I’m Director of Standards Architecture here at VMware, and I’m – go ahead.

Michael Coté: I was just going to say I’ll have to put up a link to the video, but we were joking before we were recording this, it’s been a few years since we talked last, and I remember the last time we talked, we did it in a video. I think at that time it was SMASH that had been released or something like that, and that was the kind of opportunity, but we were just kind of going over some of the standards and things that the DMTF had done. I was just hoping that we’d kind of get an update and have a few questions here and there about what you guys’ involvement in IT and cloud stuff is.

Winston Bumpus: Terrific, terrific, so I can give a quick thumbnail sketch, and then maybe you guys have some questions and we can go from there. Does that make sense?

Michael Coté: Yeah, that would be fantastic.

John Willis: Sure.

Winston Bumpus: Great. So perfect, so yeah, certainly the SMASH stuff, which is server management, probably a couple of years ago when we talked, that was something important, and it’s now implemented in lots of servers and really trying to make management for servers standardized. The management interface is a place where customers don’t think you need to innovate; it’s what you do with the management information that’s important. So servers was a key piece, and then we extended that into the desktop with a cleverly named set of standards called DASH. So we had SMASH for the servers and then DASH for the desktops, and now that’s embedded in various chipsets from companies like Broadcom, Intel, and AMD.

So you can remotely manage and do power management and do other remote management of desktops and a lot of the enterprise desktops have that embedded today, all using the very similar technology. So beyond that certainly virtualization is really changing the landscape of enterprises, and so we’ve expanded in that space. One of the key standards that’s come out of there is a thing called OVF, the Open Virtualization Format and it’s a way to –- it’s metadata that you associate with a virtual machine image or multiple virtual machine images that allows you to have information about the resources that are required to deploy and things like that.

I liken it to, and a good analogy is, it’s kind of the MP3 of the data center: I’ve got an image the way I like it, and I can rip and burn an OVF of that particular image or group of images and deploy it someplace else. So that was really around virtualization, and you can see as we move into the cloud it’s becoming a key building block to be able to move workloads in infrastructure as a service.
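For readers who want something concrete, here is a minimal Python sketch of the idea Winston describes: metadata that travels with one or more virtual machine images and states the resources needed to deploy them. The element and attribute names (`package`, `vm`, `cpus`, `memory_mb`) are invented for illustration and are not the real OVF schema, which is considerably richer.

```python
import xml.etree.ElementTree as ET

# Hypothetical, simplified descriptor. Element and attribute names are
# made up for this sketch and do NOT follow the actual OVF schema.
DESCRIPTOR = """
<package name="web-stack">
  <vm id="db" image="db.img" cpus="2" memory_mb="4096"/>
  <vm id="web" image="web.img" cpus="1" memory_mb="2048"/>
</package>
"""

def resource_requirements(xml_text):
    """Return {vm_id: (cpus, memory_mb)} read from the descriptor."""
    root = ET.fromstring(xml_text.strip())
    return {
        vm.get("id"): (int(vm.get("cpus")), int(vm.get("memory_mb")))
        for vm in root.findall("vm")
    }

def total_requirements(xml_text):
    """Sum CPU and memory needs across every image in the package."""
    reqs = list(resource_requirements(xml_text).values())
    return sum(c for c, _ in reqs), sum(m for _, m in reqs)
```

A deployment tool could call `total_requirements(DESCRIPTOR)` before placing the package, which is roughly the "information about the resources that are required to deploy" role mentioned above.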

So that’s kind of where we’ve been, and then certainly moving into the cloud space, building on those technologies, and we’ll be talking about that.

Michael Coté: I’ve noticed that you’ve been kind of on the cloud circuit of late, if you will, speaking at a lot of cloud conferences and things like that about –- I think especially over the past year or so there has been much discussion about standards for the cloud and things like that. I mean why don’t we just jump into like what –- I mean what’s the –- virtualization is an obvious sort of thing, it’s sort of like a building block for doing cloud standards but like what are you guys –- what’s the DMTF kind of seeing or helping with as far as cloud standards and things like that? What’s the involvement you guys are having?

Winston Bumpus: So one of the things we did, about a year or so ago, was launch an activity called the Open Cloud Standards Incubator. The reason we call it an incubator is that we really didn’t have a set of specs to work on; we really needed to come together as an industry.

So a large group of companies came together, companies like AMD, CA, Cisco, Citrix, EMC, Fujitsu, HP, Hitachi, IBM, Intel, Microsoft, Novell, Rackspace, Red Hat, Savvis, SunGard, Sun Microsystems and VMware, because it’s important that when you talk about standards, the constituency is a big deal. I was talking to somebody earlier today about some standards that were worked on years ago in another organization, and it was a good idea but it just didn’t fly because you didn’t have the right constituency.

So I think having the right players engaged is important. So we started this incubator, and really the process of it is this kind of rapid rolling out, getting together so we don’t spend a lot of time on how we do it; we get right into the work of looking at what we need to do. The first thing that we really needed to do was agree on definitions, use cases and architectures. I mean, we thought that that was an important piece. I used to say that if you had 50 people in the room you’d have 52 definitions of what cloud was.

So we really needed to come together as an industry and focus on that, and then beyond that, we think there were three key issues to be solved in cloud interoperability.

One is about portability: how do you move from one cloud to the next? The second one, and maybe it’s the most important since we see a lot of discussion on it, is around security; that’s one of the issues that a lot of the folks who are looking at clouds are concerned about. If I move into this public cloud, do I have enhanced security concerns? And the third thing is just interoperability: what are the interfaces so that it can tie into existing business infrastructure?

So the work in the DMTF is really focused around APIs, packaging formats and the security work, and with that we are actually working with our alliance partners and one of the new organizations that’s cropped up over the last year or so, called the Cloud Security Alliance. I don’t know if you’ve heard of them.

Michael Coté: Right, I think we remember them.

Winston Bumpus: Yeah, but the cool thing about what they are doing is, they’ve got a really good group of, you know, again, constituency is important. They’ve really got a lot of the key cyber security guys engaged in this activity, and in addition they’re saying let’s not go off and build new standards, let’s talk about what are the best practices around cloud security that people should implement, because they feel that the technology exists and there’s lots of good standards that are already there. So what it really is about is enabling best practices.

So the DMTF has several alliances, and that’s one we’ve recently formed, or formed last year, to be able to leverage that expertise as we are trying to solve the security issues in addition to the interoperability and portability issues that we are working on.

John Willis: Cool, so Winston, I’ve been following DMTF; we were talking before the podcast about how long we’ve both been doing this. So I remember first getting involved with the DMTF back in the mid ‘90s, with the Management Information Format, and then kind of being somewhat coarsely involved, never in the group but definitely following it pretty hard when it made the transition to kind of object formats and the transition from desktop management to distributed management.

Winston Bumpus: Sure.

John Willis: And just watch, you know, my excitement has always been kind of the name of this podcast, the IT management guys were the clicking clacker of IT management. And so I look at all that’s going on in DMTF; in fact I’ve probably had a five-year hiatus now. When I left I was playing a lot with WMI and Pegasus and CIM and stuff like that, and then I kind of took a five-year dive into kind of WebOps distributed computing, and then OVF kind of brought me back in.

So in preparation for this, I’ve probably been following OVF a little bit. I look back at DMTF and one of the things I kind of saw was, it seems to me, and don’t take this as disrespect for DMTF, that we are missing some critical pieces; there were some pieces that just kind of got stuck in the mud. Because when I think about the problem today, I think about the problem being this lifecycle, from a management standpoint, of provisioning, configuration management, systems integration, kind of an automation lifecycle, key provisioning and all the components really that go into that.

And from what it appears at a surface level, looking at the website and not spending a whole lot of time in DMTF, it seems like there is a reasonable amount of focus on the provisioning with OVF and images, but it seems like there is not much more on configuration, system integration and then the manageability. If I go back and look at CIM and WBEM, I don’t see it all that clearly; it seems to me there should be a bigger picture. Am I making sense?

Winston Bumpus: Yeah, yeah you are, and there’s two pieces. One of which is our website, and you know, I would probably say it’s probably hard to find OVF on it today, you have to dig a little bit, so we’re actually doing a website redesign; so that’s a small niche, that’s the icing. I think there are some things that we’re doing, particularly around CMDB federation; that work is in the DMTF and ongoing, which I think is a higher level abstraction piece. I think, you know, as far as... you know, we spend a fair amount of time.

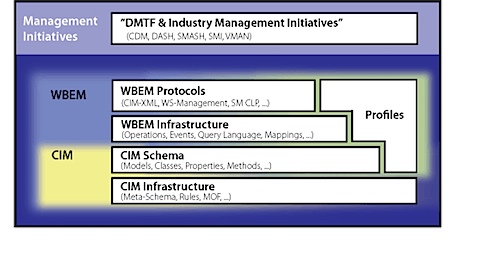

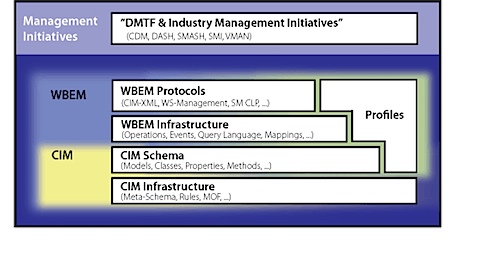

Certainly, the work we’ve done on CIM, the Common Information Model, has been a major piece of the underlying technology; even OVF is based on it, and all the rest of the technology leverages it. CIM is really the common information model, the object model that we started work on, actually we started work on it in ‘96, John, so that was how long ago we started down that road, and we actually started doing web based management in ‘98. So we’ve been at...

John Willis: Right I remember that.

Winston Bumpus: We’ve been at it a while and CIM today has grown to somewhere, you know 1,500 classes, some 4,000 properties and I really look at it as the DNA of IT infrastructure. Every Windows, Linux, the hardware, virtualization, OVF, all of the pieces we’ve got are building upon that information model for management. You need to — it’s a lot about the definitions, relationships, methods and things that are in it.

So we spend a bunch of time on that. We’ve also gone beyond the original POX version, CIM-XML. In 1998, when we were doing web services, I mean, we were doing web services before SOAP: how do you leverage XML and HTTP to provide management? That was the whole concept behind Web-Based Enterprise Management. So we’ve gone beyond that and we’ve worked on our SOAP-based protocol, WS-Management, which has got a lot of traction. Like I said, it’s embedded in chipsets both in desktops and servers, and it’s probably in every Windows machine, you know, certainly since Vista.

CIM has been in Windows since Windows 98, a lot of people don’t realize it, and there’s a lot of Linux distributions today that have it implemented to provide that. Now moving up the stack, you know, certainly tying that stuff to the CMDB work and what we’ve done in the virtualization profile work, in addition to where we’re working on the cloud stuff.

So we’re not designing, you know, I don’t think it’s the job of the DMTF to design a complete management system, but we certainly are trying to define the models, the data that we can agree upon to provide interoperability in this space, and I think we’ve done a good job. I think millions of machines today, I think you’d be hard pressed to find an x86 machine that didn’t have some piece of its management infrastructure built upon DMTF technology.

Michael Coté: In the discussions that John and I have, I mean, that’s one of the reasons that the DMTF comes up. Well, I guess one of the reasons is that we’re sort of, well, one of us is old enough, but we’re kind of experienced enough that we actually remember when we put out all of the CIM stuff and get excited about like weird models of things like that.

But there is like a, and John now works for a company that’s part of this at Opscode, and there is this interesting kind of DevOps cloud sort of thing going on now, and Cisco speaks a lot to this with their Unified Compute stuff and other people are beginning to as well, where there is a reemphasis on sort of model based configuration management and automation and things like that, where people do get obsessed with that kind of modeling effort that CIM was trying to do like, I guess, ten years ago now, if not a little over that, and it is interesting.

There is a continuous cycle of us reinventing stuff in IT, but it does seem like this is a good time for CIM to come in, like you were saying, with that ubiquity of modeling and everything that already exists, as a way of saying, here’s the models that exist for IT, and then try to figure out how to fit that into the way this kind of DevOps mentality or cloud mentality people are trying to manage their IT.

Winston Bumpus: Right, and looking at CIM, it is an information model; it was always intended to be. I have often said that you don’t have to use every word in the dictionary to write a book, and CIM is really kind of the dictionary or a blueprint of all the technology, and I think we are still moving up the layers of abstraction to new model abstractions as we move into cloud. Look at OVF: OVF doesn’t implement CIM like 00:15:39 did or any other, you know, the infrastructure, you know, Pegasus or any of those other CIM object managers.

It is really a level of abstraction that represents those concepts at a much higher level of abstraction, even in some of the cloud APIs. I think one of the things that’s really interesting is that OVF has been kind of embraced not only in the VMware vCloud API, but in other cloud APIs, you know, the one in OGF for example, the OCCI, and others, and so all that stuff is based on CIM. And when you take a look at stuff that is going on in SNIA as well, the Storage Networking Industry Association, you know, all of that management infrastructure and where they are moving into the cloud space is again all built on those same fundamental building blocks.

So we are on a journey and I think the things that we work on John are what –- you know, we need people to come in and say this is something important. We don’t sit at the DMTF and say what standard we are going to create today? It is more driven by either customers coming in and saying we got a problem. Like in the case of the SMASH, it was a group of Wall Street customers that just said, you know, we are not going to buy products that have different management interfaces and you guys go figure it out and come back when you have got it done and so those are really the driving forces here.

When somebody has got a problem, you need to go solve it and there is a bunch of people willing to go work on it.

Michael Coté: Yeah, it is us industry analysts who are just supposed to make stuff up out of thin air, so I am glad you are not getting into our business.

John Willis: Exactly.

Michael Coté: Sorry I interrupted you there John, go ahead.

John Willis: Yeah, no problem. So, the thing I was thinking about, like one of the reasons I was so excited about CIM, you know, when we were making that kind of transition from like a MIF model to an object model, is that, you know, when I wish you can describe the difference between where, what not and what the objects in abstraction was about, it wasn’t about what’s on the machine, which was more of a MIF model, it is about what we can do with the machine, you know what I mean?

I wanted to just get a little background. I spent a lot of blood, time in the Tivoli world like trying to implement CIM like architectures and I always felt that there was — it seemed to be — and I agree it’s not DMTF’s job, it is like, if you look at what Microsoft, they went really far with WMI and the idea and they kind of stopped short, but I always thought that, the idea that maybe CIM or WBEM would basically be the –- had a way how to use it, you know what I mean, tool set stuff.

How do I manage a system? And that’s the place I have always been, I won’t say frustrated, it is just like, I wish I had seen more adoption from a CIM perspective.

Winston Bumpus: You know, one of the things that we did there John that we didn’t have in the earlier days and maybe again this is probably a function of the website, but we have actually gone and created all these things called profiles and the profiles and again you know, it would be hard to find just looking from the outside, but the profiles actually talk about. So, you know, let’s take a power supply and I want to do power management or I want to do power management on my system.

So, there is a power management profile, and in that power management profile there are a lot of use cases. So it is like, you know, this use case in the power management profile is like, how do I monitor the wattage of a power supply? How do I change power states on a power supply? And that is what the profile does.

So, there is one for virtualization; there is one called the virtualization system profile, for example. So, it says, how do I pause a virtual machine, how do I suspend it, how do I resume it, how do I start and stop it. I mean these are very high level examples, but there’s profiles for each one of these things now, and I think there is probably 40 or 50 profiles out there that are basically, how do you take the model and the protocols and how do you actually do something useful. You might want to take a look at those, because those are...
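As a rough illustration of the kind of use cases Winston lists (pause, suspend, resume, start, stop), the operations can be pictured as a small state machine. This is only a sketch: the state names, the operation names and the `VirtualMachine` class are hypothetical and are not taken from the DMTF virtualization system profile, which defines its own states and CIM methods.

```python
# Hypothetical states and operations, for illustration only; the actual
# DMTF profile defines its own state model and method names.
TRANSITIONS = {
    ("stopped", "start"): "running",
    ("running", "pause"): "paused",
    ("paused", "resume"): "running",
    ("running", "suspend"): "suspended",
    ("suspended", "resume"): "running",
    ("running", "stop"): "stopped",
    ("paused", "stop"): "stopped",
}

class VirtualMachine:
    """Toy model of a VM whose lifecycle follows TRANSITIONS."""

    def __init__(self, name):
        self.name = name
        self.state = "stopped"

    def apply(self, operation):
        """Apply an operation, or raise ValueError if it is not allowed."""
        key = (self.state, operation)
        if key not in TRANSITIONS:
            raise ValueError(f"cannot {operation} a {self.state} machine")
        self.state = TRANSITIONS[key]
        return self.state
```

The point of a profile, as described above, is that every vendor agrees on the same set of operations and their meaning, so a management tool can drive any conforming system the same way.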

John Willis: Yeah, I know admittedly I have a lot more research to do and I actually, I mean one of the things I was going to do is try to invite myself into some of these discussions because I’m very interested in where this — this is the right way to do it, I’ve always felt it is the right way. Now onto another interesting thing so there was a specification, I’m thinking you’re the only other person on the planet that remembers this and I’m hoping you are, so and let me back off for a second.

Winston Bumpus: Okay, I can almost guess what it is but go ahead John.

John Willis: Yeah, all right, let me do the set up, and that is, it’s funny, in this new DevOps movement there’s a lot of themes about culture and clearly automation, but one of the things I think DevOps has kind of really been driving is the self service, the ability for developers to really kind of control their destiny, right?

I mean the idea that they can get instances with APIs, and with products like configuration management and automation they can actually go the last mile and deploy and integrate stacks. But one of the things that the DevOps community seems to be bringing up a lot, and I’m like, ah, I think this happened before, is the idea that developers, you know exactly where I’m going but let me say this, that developers should be able to define the manageability of their applications. And the voice is getting louder, and louder, and louder, and I’m like, this was done before, and it was called the Application Management Specification. It somehow died an ugly death.

Winston Bumpus: Well, so here’s the issue, and I probably... because one of the books that I co-authored around 1999, and at that time it was probably the only book written, was called “Foundations of Application Management”. The title is almost an oxymoron, because applications really aren’t manageable. And I know the AMS stuff that you were working on at Tivoli, that whole Application Management Specification, which is a whole concept of let’s instrument applications at the time they’re manufactured for manageability, rather than trying to bolt stuff on later on and figure out how to do it, right?

John Willis: Right.

Winston Bumpus: This is — you know and stuff like ARM, which is the — ARM which is –-

John Willis: Well, I’ve never been a big fan of ARM but it works. The AMS was going, it’s like here’s the thing I know that need to be modeled about myself.

Winston Bumpus: Yeah, yeah.

John Willis: Here’s the overts that are very important to me, here’s my inventory signatures, and it was rudimentary back then but if you look at what we’re doing in the WebOps and the DevOps, I mean they’re doing this kind of stuff with Cucumber, I mean they’re kind of defining it on their own now.

Winston Bumpus: You know what, what I think is happening in the IT space, and even if you look at your desktop, I mean, there was a time when describing all the applications and the relationships between them was somewhat possible, but now the complexity is such, and I have to say particularly in the Windows environment, I tell people you don’t install software, you compile it into the OS. So it makes it really difficult to separate out and to manage those individual components.

So I have to tell you that’s kind of part of the reason I think I emotionally punted on this issue, and why OVF is such a charm, because we get a new layer of abstraction. It’s like we got all these thousands of components, we put them together and they get to work and it’s perfect, great, dip it in lacquer, now let’s deal with managing that. That, versus dealing with the complexities of all the components in an application, and in the platform itself, and in the OS.

John Willis: Well yeah, so I think that’s the difference though, that’s the defining line. So the application developer never worries about the underlying infrastructure, right?

Winston Bumpus: Right, right.

John Willis: That’s seamless, but I think that — I was a firm believer in AMS and I just spend my travel in dealing in this DevOps community and I’m pretty heavy into it. I mean there’s a crime, people are doing this now and at the app level, the developers want that kind of last piece they can automate everything about what they’re doing except they have –- they can’t automate the manageability of their apps and that’s still –-

Winston Bumpus: Do you know what that piece was John and I –- this is a –- it’s certainly been an area that I would — because I was Chair of the software MIF work that we did early on in ‘93, that’s how I got involved in DMTF. I said, we’re doing this software management, maybe we can create a set standard MIF and when CIM came along, I immediately ramped up the application management I think it was called the application workgroup to do the CIM model.

Certainly there’s still a CIM model of the application, but with the software developers themselves, I think there was never a strong desire to instrument software for management; they assumed the platform was going to take care of it. The platform, once it got the applications, didn’t know enough about the application to do it, and the tools, which are the third leg of the stool, didn’t really have the expertise to do it either.

So AMS had a great concept, but there was never buy-in from the ISVs on it. I’ve had discussions in the past in the DMTF of how we could attract or get interest from the ISVs, but they were too busy trying to get that next product out the door ahead of their competitors, and customers were willing to accept that it didn’t have the stuff embedded. But I feel it’s an unsolved problem. I think, out there, that it’s fighting the challenge; maybe there is a different way and a different constituency that may be interested in solving it.

John Willis: Well I think the problem still exists and I think what you said with AMS was premature right, we didn’t have the technology, the idea of self service develop didn’t exist. I firmly believe — I mean they — if they would make me the king for a day, I would dust off AMS and clearly there will be lot of things that would have to be re-factored, but I think the fact when I go around telling people, when they explain this 00:26:42 I’m like, you know there was actually standard for this and we actually addressed all these concerns and it would be — I think it would be interesting if the DMTF kind of dusted that off and said, we had this thing, this is a growing — at least I see it hard in the WebOps in the large scale, the new type of fast growing infrastructure datacenters, the Flickr, the Twitter those guys, and it should be a nice way to drive DMTF into that world.

Winston Bumpus: Yeah, and to be honest, John, it really would take two or three companies, or a group of two or three guys from different companies, that would say, gee, let’s go work on this problem, to drive everybody here. It’s hard for a platform vendor to go drive that, which is really something that needs to be driven by the ISVs, so that’s just my sense.

Michael Coté: Yeah, and I think, I mean, that’s one of the, it’s not really a side effect, but one of the exciting effects of all the cloud stuff and DevOps is that we are kind of emerging from this drought where developers are trained not to care about infrastructure, and like it’s not cool for developers to care about all this sort of stuff. I mean, personally, and blame is the wrong word, but I attribute it to the kind of write once, run anywhere mentality of Java and .NET and everything, where there are various runtimes of programming languages that “protect you from the infrastructure” and you are supposed to be ignorant of all of that.

And then as you do high scale web application stuff that cloud computing emerges from, and then cloud computing, it’s funny, it’s all about knowing the infrastructure that you have and programming that infrastructure or, as people say, treating it as code. It does seem like a rare opportunity for things like AMS to kind of, sort of get traction again, because there is a certain level of maturity you get in IT management where you start to sit around and you think, if we can only get those damn developers to write their stuff so it’s easier to manage, things would run a lot better.

John Willis: Yeah exactly.

Winston Bumpus: Well, maybe not, and the truth is, if we can really get the development platforms to integrate that at build time...

Michael Coté: Exactly. And I mean, the point of what you are saying as far as having ISVs do it is, there needs to be that demand from everyone that translates into tools doing it and people supporting it, because no matter how nice it seems like some theoretical efficiency is going to be, unless it actually, what was it you quoted some time ago, unless it makes money, saves money, or does something for the government, no one is really going to care about it in IT. So it needs to fit one of those things, and it does seem like the promise of cloud stuff, and we’ll see if it plays out, is one of those first two things. I don’t think that government is involved at the moment, but at least it’s good motivation to do things.

Winston Bumpus: I have to say...

Michael Coté: I mean I should say that government is involved but I don’t think the government is involved in mandating a bunch of cloud technologies except using it for their own and things like that.

Winston Bumpus: Well the CIM work, so just kind of an update. So people have falling to 00:29:53 mantra, moving the government to the clouds of 00:29:58 the first CIO across all the government agencies, and the DMTF has been working pretty closely with NIST on some of the standards, and they even announced on May 20th this thing called SAJACC, which is the Standards Acceleration to Jumpstart Adoption of Cloud Computing.

Michael Coté: And hopefully it will be MC’d by Wheel of Fortune’s Pat Sajak, that would be fantastic.

Winston Bumpus: Exactly, I think it would be perfect. I was trying to think of an acronym for PAT, so it would be PAT SAJACC. But they’re really looking at this whole program of looking at what standards are available, where the gaps are, and then actually road testing these things to say, yeah, these things really work, and then putting them out there.

So it’s really kind of interesting to see the government being gutsy enough to take a lead on some of the stuff. Certainly there’s what’s happening with NASA and the Nebula Project that Chris Kemp has been driving from NASA, but a lot of the government agencies are all moving down this road, and there’s Apps.gov, which has been put up with lots of samples of things.

We are on the edge, and it’s going to take some guts and some innovation to move to the next layer, but it’s a pretty exciting time. So my “make money, save money, or government regulation” isn’t too far off, and certainly OVF is one of the things that they are pretty excited about. But we’ve still got the app side, which I think is an issue.

One of the issues that’s come up recently, and I will just share this with you because it’s kind of interesting, is just license management. I mean, that’s another whole issue. As we start moving workloads around in the cloud, how do we track and monitor usage, not necessarily enforce it, but how do we track it? We’ve got all these licenses that we’ve deployed, and what’s the license model that we need to be tracking? Because I think customers are starting to get pretty nervous about moving stuff around and not being able to make sure they’re in compliance. So there’s lots of little challenges we’ve yet to solve.

John Willis: I wanted to add one more question about OVF, just to kind of play devil’s advocate on OVF. You know, I think it is really the primary standard right now for what’s going on in the cloud, and it’s obviously got a lot of adoption. But one of the things I say a lot when I’m presenting about operations and new operation when I first structure is that operations is not about cloud, it’s about a cloudy world.

And what I mean by that is the reality is that there are a lot of flavors of services. You know, bare metal isn’t going away anytime soon, and you have different versions of virtualization, and then you have the extreme hypervisor abstractions like the Amazons and some of the other cloud players. I’m wondering if OVF didn’t dive too deep into, or at a point in time look at, the way the world looked in terms of a focus on a virtual instance, kind of a coupled version of an instance. Many of the cloud providers today, for example, are very much decoupled in their services. Their storage is outside of the instance, not coupled to it, and so are their services like key models and different things like that.

Would you kind of agree that maybe there needs to be some type of diversion now? And where does OVF go to address that, if you believe it is a potential issue?

Winston Bumpus: Yeah, and there’s been lots of discussions, as you can imagine. Everything from using OVF for bare metal provisioning, certainly that’s one extreme. There’s been discussions about where SLAs fit.

So you can do extensions with OVF, and some people have started to put SLA-like things, Service Level Agreement-like things, in OVF. Like, not only do I need this many processors or this much memory, but I want to get something with a certain kind of response time or something. So the question really is where does all that belong, and there are starting to be discussions of, well, maybe this stuff needs to be external and referenced from OVF, and maybe it’s not part of OVF. So that’s one piece of the equation.

The other piece is that OVF is really about deployment, but people are starting to use information in OVF further down the lifecycle. You talked about lifecycle management. An OVF can represent three or four different virtual images that may make up a web server, a database server and some middleware. You can deploy that, and then you can say this is the sequence in which I want to start those up. And then, well, maybe that’s the sequence in which I want to take them down, and then you cross the line. But people are using it for that. They’ve crossed the line from deployment to taking some of the information in OVF and using it for run-time management.

So there are lots of questions yet, because it’s moving in lots of directions. There are also questions about the granularity of all of the apps and the configuration that an image actually represents, and about the management. So now that I’ve deployed it, and I want to provide some patches or do some configuration management, how much do I have there?

So, you know, the discussion is moving in all kinds of directions. I think we’re trying to move fairly carefully on that, but at the same time the extension mechanism is allowing people to experiment outside of its intent.
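[Editor’s sketch, not part of the conversation: the start-up and take-down sequencing described above can be illustrated in a few lines of Python. This is only a sketch under stated assumptions. The `VirtualSystem` class, its `order` field and the machine names are hypothetical and are not taken from the OVF schema, and taking systems down in the reverse of the start order is one plausible choice rather than something the speakers specify.]

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class VirtualSystem:
    """One virtual machine in a multi-machine package (hypothetical model)."""
    name: str
    order: int  # assumption: lower numbers start first


def startup_sequence(systems):
    """Return machine names in the order they should be started."""
    return [s.name for s in sorted(systems, key=lambda s: s.order)]


def shutdown_sequence(systems):
    """Return machine names in take-down order (assumed: reverse of start-up)."""
    return list(reversed(startup_sequence(systems)))


# A three-tier package like the one described: database first, web server last.
appliance = [
    VirtualSystem("web-server", 3),
    VirtualSystem("database-server", 1),
    VirtualSystem("middleware", 2),
]

print("start:", startup_sequence(appliance))
print("stop: ", shutdown_sequence(appliance))
```

[Using the ordering only at deployment time stays within the packaging role discussed above. Calling `shutdown_sequence` later in the life of the system is the kind of run-time use that crosses that line.]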

John Willis: Yeah, I think that, and I mean I also have a horse in the race, but that’s the important thing about configuration management. There are two schools of thought. There’s the school of thought that you can solve all your cloud problems with images. And then there’s the school of thought that you solve cloud problems, or even virtual instance problems, clouded and unclouded, with just enough operating system, and you use configuration management or desired state and system integration to glue them together.

In this way it’s always a defined-state update, and you can blow away systems at any point. So I think that’s a piece that clearly needs to be baked in at some point.
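[Editor’s sketch, not part of the conversation: the desired-state approach described above can be illustrated as comparing a declared state with the actual state and applying only the difference. This is a minimal sketch under stated assumptions. The function names and the dictionary keys are hypothetical, and it does not represent how any particular configuration management tool works.]

```python
def plan_changes(desired, actual):
    """Return only the settings whose actual value differs from the desired one."""
    return {key: want for key, want in desired.items() if actual.get(key) != want}


def converge(desired, actual):
    """Return a new state with the planned changes applied (inputs are not mutated)."""
    updated = dict(actual)
    updated.update(plan_changes(desired, actual))
    return updated


# Hypothetical desired state for a web node built from a minimal OS image.
desired = {"package:nginx": "installed", "service:nginx": "running"}

# A freshly rebuilt machine starts with nothing configured.
fresh_machine = {}

configured = converge(desired, fresh_machine)
print(configured)

# Running again changes nothing, which is why a system can be
# thrown away and rebuilt from the same definition at any point.
print(plan_changes(desired, configured))
```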

Michael Coté: It’s always the blobs versus the scaffolding approach. It’s a nice divide to have between the two. Well, I think that was the overview and update I was looking for, and there have definitely been some ongoing questions we’ve always had that John was good about hitting up. So unless there’s any other update about what the DMTF is up to, I think that’s a good way to close it out.

Winston Bumpus: So I think it’s terrific. I appreciate the time, and it’s always good talking to you, Michael and John. There is a lot of good stuff going on in the DMTF. Anybody listening to this who wants to get involved can go to dmtf.org, and if you have any questions you can always pop me an email at [email protected]. I’m glad to answer any questions or provide any help I can.

Michael Coté: Yeah it sounds like there’ll be an exciting new website some time in the future as you referenced several times.

Winston Bumpus: Yeah may come up with two.

Michael Coté: I mean we really appreciate you being on and we’ve been looking forward to it so that’s fantastic. Thanks so much.

Winston Bumpus: Great. Thank you very much.

Michael Coté: And with that, we’ll talk with everyone next time.

Disclosure: OpsCode, where John works, is a client. Be sure to check the RedMonk client list for other relevant clients.
