“Is this Silicon Valley or Hogwarts School of Witchcraft and Wizardry?

Over the last 18 months or so, this question has become tougher to answer as a flood of products with names like Voldemort, Hadoop and Cassandra have appeared on the scene.

They are part of a new wave of highly specialized technology — both software and hardware — built by and for Web titans like Facebook, Yahoo and Google to help them break data into bite-size chunks, and present their Web pages as quickly and cheaply as possible, even while grappling with increasing volumes of data. Facebook, for example, created Cassandra to store and search through all the messages in people’s in-boxes.” – Ashlee Vance, “Big Web Operations Turn to Tiny Chips”

If you study the French Revolution, the odds are good that at some point a professor or author will invoke the pendulum metaphor. The aptness is clear, as history adequately demonstrates a tendency for human events to swing back and forth from one extreme to another. Which is precisely what we’re seeing in technology today, as we swing from general purpose back towards specialization.

From the mainframe’s heyday to the Unix wars’ fragmentation to the ascension and dominance of Windows, technology has periodically vacillated between the extremes of general purpose computing infrastructure and more specialized hardware and software combinations. As ever, what is old will be new again.

What’s new these days, then, is the old preference for specialization. Just a few years ago, application development was a simple proposition. You picked an operating system – general purpose, of course – a relational database (duh), a programming language, middleware to match and you got to work. It may yet be that we come to look back on those days with wistfulness, nostalgic for simpler times.

Even the most basic of decisions today is fraught with the paradox of choice. Consider the longtime standard LAMP stack. The explosion of options for persisting data has been well documented (coverage): the days when storing data meant choosing which relational database you wanted are, for all intents and purposes, over. Relational databases like MySQL remain a popular choice – and absolutely the most popular for backing existing applications – but they are no longer the only choice. General purpose operating systems like Linux remain the dominant deployment option, but that preeminence is increasingly threatened by cloud platforms which abstract the operating environment and appliances which make it irrelevant. Programming language fragmentation, meanwhile, has been well underway for decades, so there’s nothing new there.

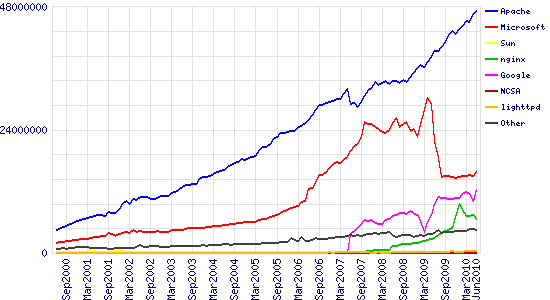

But what about Apache? Surely the venerable web server remains the most popular platform for deploying web applications? Well, yes, it is. Here are Netcraft’s numbers for overall web server share.

And yet there are signs that Apache’s dominance is challenged for what Netcraft terms “top sites.” See the following:

<img src="http://news.netcraft.com/wp-content/uploads/2010/06/wpid-overallc.png" alt="Netcraft Web Server Survey">

Some will undoubtedly argue that Netcraft’s numbers are imperfect, and they will be right. But the fact is that developers are increasingly weighing the merits of Apache versus new, built-for-purpose alternatives such as nginx, Node.js (coverage) or Tornado. Ryan Dahl’s presentation on Node examines some of the potential limitations of the Apache thread-per-connection model versus event-loop based alternatives, and some very bright developers are listening. The success of Node et al. doesn’t mean that Apache isn’t still the most popular choice, of course: it simply means, in what is becoming a familiar refrain, that it’s no longer the only choice.

Because there is an ever-growing library of more specialized alternatives available.
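To make the thread-per-connection versus event-loop contrast a little more concrete, here is a minimal sketch in Python. It is not how Apache, Node or Tornado are actually implemented; it only simulates connections that spend their time waiting on I/O, and every name in it (`IO_DELAY`, `serve_with_threads`, `serve_with_event_loop`) is hypothetical and chosen for illustration. The point is simply how many threads each model ties up while the clients are idle.

```python
import asyncio
import threading
import time

IO_DELAY = 0.05  # assumed stand-in for time spent waiting on a slow client


def handle_blocking(conn_id, seen_threads):
    # Thread-per-connection: this thread is parked for the entire wait.
    time.sleep(IO_DELAY)
    seen_threads.add(threading.current_thread().name)


def serve_with_threads(n_connections):
    """Handle each simulated connection on its own thread.

    Returns the number of distinct threads that did the work.
    """
    seen_threads = set()
    workers = [
        threading.Thread(target=handle_blocking, args=(i, seen_threads))
        for i in range(n_connections)
    ]
    for worker in workers:
        worker.start()
    for worker in workers:
        worker.join()
    return len(seen_threads)


async def handle_async(conn_id, seen_threads):
    # Event loop: awaiting yields control so other connections can proceed.
    await asyncio.sleep(IO_DELAY)
    seen_threads.add(threading.current_thread().name)


async def _serve_all(n_connections, seen_threads):
    # Run all simulated connections concurrently on the one loop.
    await asyncio.gather(
        *(handle_async(i, seen_threads) for i in range(n_connections))
    )


def serve_with_event_loop(n_connections):
    """Handle every simulated connection on a single event-loop thread.

    Returns the number of distinct threads that did the work.
    """
    seen_threads = set()
    asyncio.run(_serve_all(n_connections, seen_threads))
    return len(seen_threads)


if __name__ == "__main__":
    print("threads used, thread-per-connection:", serve_with_threads(20))
    print("threads used, event loop:", serve_with_event_loop(20))
```

In this toy setup the threaded version needs one thread per waiting client, while the event-loop version services all of them from a single thread. Whether that trade-off matters in practice depends on the workload, which is rather the point of the specialization argument.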

Nor is the specialization bug merely infecting the software world. As Ashlee’s piece documents, there are more and more hardware startups popping up. Their raison d’être, almost universally, is that current hardware models are not ideally suited to scale-out workloads. Besides the SeaMicros and Schooners of the world, there is evidence that firms like Google are acquiring ARM expertise with the intent of leveraging it for specialized servers. Microsoft is reportedly exploring similar hardware, as is Dell.

Doubtless many of these efforts are better classified as experiments than actual commitments to specialized hardware. And the marginal benefits of the specialized hardware, in many cases, may not outweigh the costs, whether those lie in design and production or lengthened development and deployment cycles. But as we see in software with projects like Facebook’s compiled PHP, HipHop (coverage), if the scale is sufficiently large even incremental gains in power, performance or both can be financially material.

Purveyors of specialized hardware are quick to point out that the benefits may extend beyond mere performance and power gains, however significantly differentiated their products may be in either. Custom-fitting software to hardware can introduce benefits that are either impossible to achieve on general purpose infrastructure, or not economically feasible. Certainly this was one of the guiding principles behind the Sun Fishworks project (coverage), whose name stands for Fully Integrated Software and Hardware…works. Presumably new owner Oracle agrees, given the depth of its commitment to the appliance form factor in Exadata.

How all of this plays out in a market context is yet to be determined. Clearly, developers are actively embracing the shift back towards specialization, but the traction for specialized hardware, whether on a standalone or appliance basis, is still small relative to the current market size. As I told Schooner’s Dr. John Busch when we spoke a week or two ago, various players have tried to push appliances as a mainstream server option in the past: all have failed. Outside of categories like networking, security and storage – each quite large, to be sure – specialized hardware has been a difficult sell.

What might be different this time around is that on a macro basis, the market is showing an increased appetite for specialization at every level. Combine that with a driving need to maximize performance while minimizing the expense, both in power and datacenter floor space, and the opportunities may be there where they traditionally have not been.

As to when the pendulum will shift back towards general purpose, all I can tell you is that it will at some point. The inevitable result of an explosion of choice is a reactionary market shift away from it. All this has happened before, and all of this will happen again.