One of the buzziest buzzwords of 2024 has been agentic AI. The concept of an AI agent can be nebulous: sometimes a human is in the loop along the way, sometimes not. Sometimes the system will be built on an LLM, sometimes on a smaller, more tightly trained model.

But generally, the philosophy of agentic AI moves away from “a person prompting an AI assistant question-by-question” into a world of systems and workflows. This post from Simon Willison is well worth reading for those interested in the topic.

The pricing shift from assistant-based to agent-based is going to be interesting to watch.

Many of the AI assistant tools are priced at least partially on a per-seat basis. In a world of agentic AI, when the output of the system is not necessarily driven by human activity, I suspect usage-based pricing will reign. And in particular, I suspect output tokens will be the key driver.

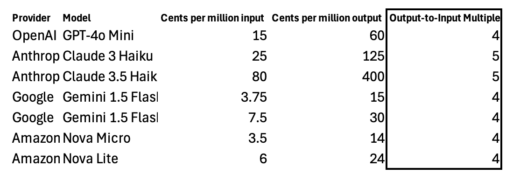

For existing models, cost/million output tokens is already more expensive than cost/million input tokens. (See Simon Willison’s updated comparison of model pricing after the release of the Nova models as a reference point for how large providers are pricing as of December 2024.) The price differences between providers and models are less important for this analysis than the consistency with which output tokens are 4-5x more expensive than input tokens.
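To make the arithmetic concrete, here is a rough sketch of how that multiplier plays out for a single workload. The prices below are placeholders I made up to reflect a 4x ratio, not any provider's actual rates, and the token counts are hypothetical too.

```python
def workload_cost(input_tokens, output_tokens,
                  input_price_per_million, output_price_per_million):
    """Return (input_cost, output_cost) in dollars for one workload."""
    input_cost = input_tokens / 1_000_000 * input_price_per_million
    output_cost = output_tokens / 1_000_000 * output_price_per_million
    return input_cost, output_cost


# Hypothetical prices in dollars per million tokens (assumed 4x ratio).
INPUT_PRICE = 1.00
OUTPUT_PRICE = 4.00

# Hypothetical workload: twice as many input tokens as output tokens.
input_cost, output_cost = workload_cost(
    input_tokens=2_000_000,
    output_tokens=1_000_000,
    input_price_per_million=INPUT_PRICE,
    output_price_per_million=OUTPUT_PRICE,
)

# Fraction of the total bill that comes from output tokens.
output_share = output_cost / (input_cost + output_cost)

print(f"input: ${input_cost:.2f}, output: ${output_cost:.2f}, "
      f"output share: {output_share:.0%}")
```

Under these assumed numbers, the workload reads twice as many tokens as it writes, yet output tokens still account for about two thirds of the bill.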

This explanation of the differences in input vs. output memory usage is the best rationale I have found for why output tokens are priced 4-5x higher than input tokens. That said, this is a fast-moving field and this comment is from May 2024. If anyone has other explanations I’d love to hear them in the comments.

The technical reason why input and output pricing differ is interesting, but in the end the pricing is its own reality, at least for the moment. As such:

Workflow-based rather than user-based systems, combined with output-driven resource usage, drive my hypothesis: output tokens are going to be the primary price lever of agentic AI systems.
