TL;DR: Docker's enterprise picture is being completed, but gaps remain. The focus on onboarding developers is relentless.

We had the opportunity to attend DockerCon 2016 in Seattle at the end of June. Docker are continuing to build on the momentum from previous conferences, and once again the buzz and energy around the event was palpable.

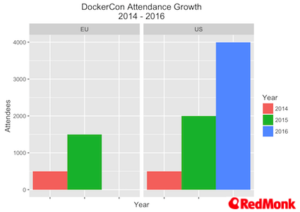

In terms of attendance, the growth in the size of DockerCon is genuinely impressive. One of the more interesting aspects of the attendee base this time was the number of people focused primarily or exclusively on operations versus pure developers. This is beginning to mirror the changes that Amazon have seen in the AWS Summit attendees over time, and is critical to the enterprise story for Docker. Although the age profile is changing, it is still significantly lower than that of many other vendor events.

Storytelling as a Service

.@theVSaraswat, Lily Guo & @golubbe doing a lovely, v. funny keynote demo #dockercon <<lots of vendors could learn from this, humour wins 🙂

— Fintan Ryan (@fintanr) June 21, 2016

One of the nicest aspects of the DockerCon keynotes was the incorporation of an element of comedy. Docker as a company are still young enough to easily get away with a somewhat irreverent approach to their keynotes (as opposed to a clichéd DJ or bad rock band before your keynote starts).

As someone who attends a lot of conferences, and an awful lot of buzzword-laden keynotes, take it from me: humour will go a long way in making your event far more memorable – when it is delivered properly. In the case of DockerCon, the demos from both keynotes were entertaining and reasonably informative about the relevant products.

Developer Experience

Unless you have been living under a rock for the last few years, you will be more than aware that Docker have had a developer-led growth strategy. Here at RedMonk we are forever telling companies about the importance of packaging, and of making that initial developer experience as seamless as possible.

Part of that overall developer experience is getting started, specifically the first couple of minutes after you start using a tool. Docker have been, and are, explicitly focusing on this area with their work on Docker for Mac and Windows, which went into public beta during DockerCon (quick disclaimer, I was part of the Docker for Mac private beta program, albeit not a particularly heavy user).

The focus on the initial developer experience will pay off for Docker in terms of onboarding. Executing on all the pieces that come after getting started, the day two experience, is the key to enterprise adoption though.

Docker Orchestration & Swarm

The big news was the announcement of Docker 1.12 (which was released at the end of July), with the inclusion of Swarm directly in the Docker engine. Now there is quite a lot to dissect in what this means, but at the highest level the goal, in Docker CTO Solomon Hykes' own words, is to make the powerful simple.

Totally on point from @solomonstre on 'make the powerful simple' #DockerCon << complexity kills tech projects

— Fintan Ryan (@fintanr) June 20, 2016

There is a focus on four areas with Swarm's integration into Docker: removing external dependencies (read key-value stores such as etcd and Consul here), adding a cryptographic node identity, a service-level API (in the cloud native world applications are collections of services, which is a concept many people struggle with), and a front-end routing mesh including DNS-based service discovery, load balancing and more.

Now there are questions one can raise about each area. But from a simplicity standpoint, for users who do not want, or need, to understand the underlying mechanics of deploying and scaling a distributed application, yet want to get up and running very, very quickly, it is very hard to argue with the approach.

On the competitive front the Swarm integration is squarely aimed at Kubernetes. While the two diverge in terms of functionality and approach, the question of “good enough” has to arise. Kubernetes has tremendous momentum, and a vibrant community around it, but if Swarm meets the use cases for a large segment of customers, and is their initial on-ramp, then the question many would ask is why change.

Interestingly, when we analysed a set of survey data from Bitnami which included orchestration choices, earlier versions of Swarm scored highly, far higher than many people expected. It will be interesting to see if the integration of Swarm directly into Docker 1.12 accelerates this adoption.

Docker Distributed Application Bundles

Docker also announced a new experimental feature, Docker Distributed Application Bundles (DAB). How people go about bundling, deploying and scaling an application, as opposed to individual services, is a subject of much discussion. While it is too early to say where DAB will go, using the service-level primitives as a building block and manipulation point appears to make sense. Arun Gupta over at Couchbase has put together a slightly more detailed intro to DAB on the Couchbase developer blog.

The closest analogy in other systems is Helm Charts for Kubernetes, which originated as a project from Deis and Google. Given that both projects are under active development it will be interesting to see the directions both take, and if any truly significant difference in the overarching approaches emerges.

Security as a Differentiator

Security is emerging as a key battleground in the containers space and Docker are doing their utmost to position themselves strongly in this area.

The key message from Docker, again, is simplicity for developers, coupled with powerful features for operations and compliance. The idea is to make security as simple as possible for developers to use, essentially by making it transparent and invisible to them. This eases the adoption curve, and making software more secure by default can only be a good thing.

Two of the key components that Docker were keen to highlight here are Docker Security Scanning and Docker Content Trust, both of which have seen significant incremental improvements over the last six months.

The evolution of security with containers is a far larger topic than we will cover here, and we will be providing a more detailed analysis of Docker security, and of the wider container security ecosystem, in the coming weeks.

Docker Datacenter

Docker's main enterprise offering is Docker Datacenter, which brings all of their offerings together. During the day 2 keynote we were joined by Keith Fulton, CTO of ADP. Now if you don’t know ADP, they are one of the largest providers of payroll software, and more general HR software, in the world. More importantly they operate at a serious scale, with all the problems and challenges that go with that, and they handle salaries – nothing will cause disquiet among employees more than not getting paid.

As we noted already, security will be a key differentiator in the enterprise marketplace for containers, and ADP’s security requirements are stringent.

.@ADP talking up @docker content security, container scanning & trusted registries<<@ADP have v. real & significant security reqs #dockercon

— Fintan Ryan (@fintanr) June 21, 2016

ADP were keen to highlight the flow and interlinking of content security, in terms of only running signed binaries, automated container scanning, Docker Datacenter's ability to limit what can actually be run, and the ties into Docker Swarm for scaling applications.

Alongside this, developer productivity was once again highlighted, with a focus on microservices. As is the case in all large organisations, ADP are dealing with a huge amount of legacy, and they view microservices as a way to increase velocity on the new product side and to open up opportunities to refactor the legacy monoliths.

Overall ADP is a very interesting use case, and we will be watching how their journey goes with interest. While they are betting heavily on Docker, it is far from the only container technology they are working with.

Docker for AWS & Azure

In keeping with the overall message of simplifying things, Docker for AWS and Azure was also announced. This service is still in beta, but the underlying premise is to make it as easy as possible to deploy a Docker swarm on AWS or Azure.

The play here is interesting: the use case is very specific, and people are already using tools such as Terraform from HashiCorp to achieve similar goals. As with Swarm, the question will be whether this is more than enough for many use cases.

Docker Store

Docker also announced the Docker Store, a marketplace for official Docker images. It will be very interesting to see what take-up there is with this approach, as marketplaces have varying levels of success depending on the provider. We have had conversations with several vendors, and in general they were all positive, the consensus being that Docker Store could be a relatively low-friction distribution channel with the potential for a lot of upside.

As of now Docker Store is still in beta, but it is expected to reach GA later this year.

The OCI Format Debacle

There is, unfortunately, no getting away from the current elephant in the room. And sadly this does take away from many of the positives at DockerCon. However, it would be remiss of me not to address the recent controversy around the Open Container Initiative (OCI) head on.

Communities take a lot of work, with a focus on consensus building, and post DockerCon we have seen a very public set of statements and arguments around the direction and validity of the Open Container Initiative (OCI) and its respective standard.

To say the commentary on the OCI has caused a level of disquiet among the wider technical community would be somewhat of an understatement. Since this particular tweetstorm we have had multiple conversations with people ranging from end users, third-party vendors, contributors and interested bystanders to outright hostile competitors. All have expressed concern about the tone and nature of the statements. It is very easy to be dismissive of this whole episode as a storm in a teacup, but it is not.

The value, and the commercial battles, around containers are not, and will not be, at the lowest container level. It is all about the services above, the pieces that enable businesses to operate. People will choose to enter at different levels of abstraction, but the focus will always be on applications and on what the eventual user experience and goal are, not the plumbing beneath. The lowest parts of any technology stack almost always get commoditised, which is what allows an ecosystem to emerge on top of them.

To quote Ben Golub during DockerCon, “nobody cares about containers” – he is right. Businesses care about ROI from their technology investments. However, they also care, deeply, about what has occurred in the past with technology lock-in at various parts of the stack, and they take careful note of the comments emanating from vendors (the current concerns around JEE are another example of this, albeit at a different point in the stack, which Oracle are beginning to address head on).

Docker have done an amazing job of making something very complex very simple to use. They also showed a lot of leadership, and maturity, during the formation of the OCI with competitors such as CoreOS. While some complained about the scope of the OCI spec, it did, and does, give reassurance to many people that a base level of compatibility will be maintained across the various tools that use containers. Docker are rightly concerned with competitors saying tools provide Docker support when they do not, but that is, again, at a higher level than the very base image format. That is where to compete.

To truly lead, Docker needs to continue to engage with the OCI effort, not dismiss it as a fake standard done to appease others.

Disclaimers: Docker paid for my T&E. Docker, Deis, Oracle, Couchbase and CoreOS are current RedMonk clients.
