So I like GNOME Do. That’s not exactly a secret. Nor am I the only one.

While it’s obviously – and admittedly – highly derivative of the OS X Quicksilver application, it’s a nice piece of work, one that continues to evolve into a capable platform. It’s also the rare application that has reshaped my desktop and the way I use it.

But none of that is what I’m interested in writing about – apologies for the digression. What I personally found fascinating about the 0.5 release was the way it continues the blurring of the lines between network and client application that I’ve been expecting for some time.

Like the dutiful Linux application it is, Do can be installed via a variety of package management systems (Ubuntu users, here’s the PPA for the latest and greatest bits). Which is entirely unexceptional, of course. What is new for Do, and still somewhat exceptional among the other applications we could discuss – web and otherwise – is the plugin architecture.

Previously, Do plugins were installed via the following:

> Download the DLL files, put them in ~/.local/share/gnome-do/plugins and then restart Do.
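
For the curious, that manual routine amounts to roughly the following Python sketch. Only the plugin directory comes from the instructions above; the function name `install_plugins` and the idea of a separate download folder are my own assumptions for illustration, not anything Do ships. Restarting Do is still left to you.

```python
import shutil
from pathlib import Path

# Plugin directory taken from the manual instructions quoted above.
PLUGIN_DIR = Path.home() / ".local" / "share" / "gnome-do" / "plugins"


def install_plugins(download_dir, plugin_dir=PLUGIN_DIR):
    """Copy every .dll found in download_dir into plugin_dir.

    Hypothetical helper: it only mirrors the manual copy step and
    returns the names of the files it copied.
    """
    plugin_dir = Path(plugin_dir)
    plugin_dir.mkdir(parents=True, exist_ok=True)
    copied = []
    for dll in sorted(Path(download_dir).glob("*.dll")):
        shutil.copy2(dll, plugin_dir / dll.name)
        copied.append(dll.name)
    return copied
```

Note everything the sketch does not do: find the plugins in the first place, check where they came from, or keep them up to date.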

Functional, but awkward. It also repeats the mistake that I seem to recall (but can’t find) Matt Mullenweg admitting to with WordPress: losing control of the plugin repository.

Mark Shuttleworth, I think, was right when he said:

> It seems that one of the key “best practices” that has emerged is the idea of plug-in architectures, that allow new developers to contribute an extension, plug-in or add-on to the codebase without having to learn too much about the guts of the project, or participate in too many heavyweight processes.

Everything from Firefox to the Do in question affirms this.

But I would go one step further and contend that, for the sake of both project owners and users, the plugin architecture needs to be enabled by the network. It should support seamless, inline integration with the project itself; not burden the user with discovery, installation, and maintenance. Linux distributions have benefited from this approach for years, and it would seem that the lesson is slowly being understood and adopted by the application layer. Witness the most recent Firefox vintage allowing for search and installation of plugins via a modal dialog box – not a trip to a webpage. Is this not apt for the browser?

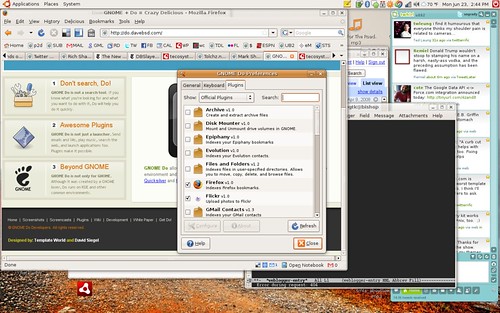

Do has learned this lesson, it would seem, as the plugin experience is now a dialog box (pictured above) backed by a network repository. That, to be sure, can lead to a new class of scaling problems. But such demand problems are discrete and targetable; a fragmented, disorganized, unindexed and far-flung forest of plugins is by definition less so.

Consider the advantages to a centralized network library of plugins:

- Streamlined, one-click installation

- Centralized discovery of plugins

- Permits “Official” and “Unofficial” plugin designations

- Makes possible community ratings, usage and other metrics useful to both developer and user

- May reduce plugin-caused bug reports (via Official/Unofficial)

- May provide usage telemetry back to project developer

And so on. The benefits of network enabling the plugin architecture are such that it’s reasonable to ask: what took so long?
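
To make the list above a little more concrete, here is a minimal Python sketch of what a centralized index makes possible: one place to search, an Official/Unofficial flag, and community metrics to rank by. Every name in it (`Plugin`, `PluginIndex`, the rating and install-count fields) is hypothetical; it says nothing about how Do's repository is actually built.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Plugin:
    """One entry in a hypothetical central plugin index."""
    name: str
    description: str
    official: bool   # "Official" vs. "Unofficial" designation
    rating: float    # community rating (scale is an assumption)
    installs: int    # usage metric reported back to the project


class PluginIndex:
    """In-memory stand-in for a network-backed plugin repository."""

    def __init__(self, plugins):
        self._plugins = list(plugins)

    def search(self, term, official_only=False):
        """Return matching plugins, best rated and most installed first."""
        term = term.lower()
        hits = [
            p for p in self._plugins
            if term in p.name.lower() or term in p.description.lower()
        ]
        if official_only:
            hits = [p for p in hits if p.official]
        return sorted(hits, key=lambda p: (-p.rating, -p.installs, p.name))
```

None of this is possible when the plugins live on a few dozen scattered web pages, which is rather the point.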

So I like Do personally for what it has achieved, but I follow it professionally for what it represents: the ongoing conflation of network and client. There’s a long way yet to travel, but we’re getting closer to applications that treat the network as an assumption rather than an afterthought, and their designs and architectures increasingly reflect that, both in the open source world and out of it.