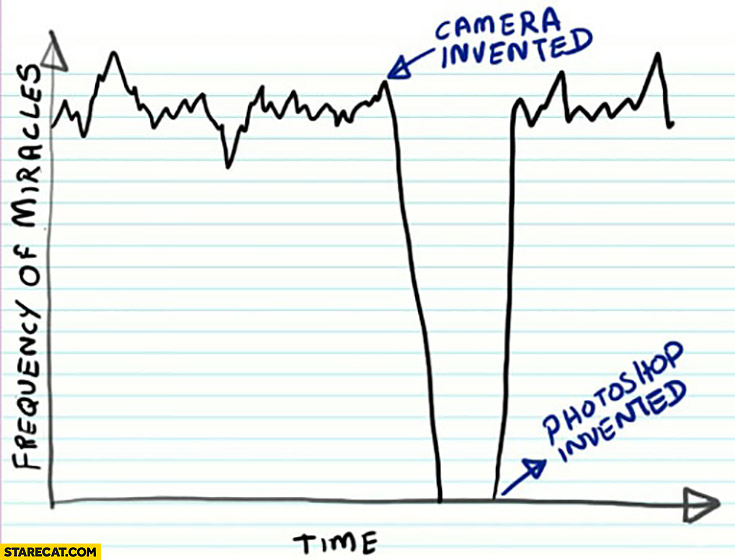

Image source: Starecat

Content Authenticity Initiatives

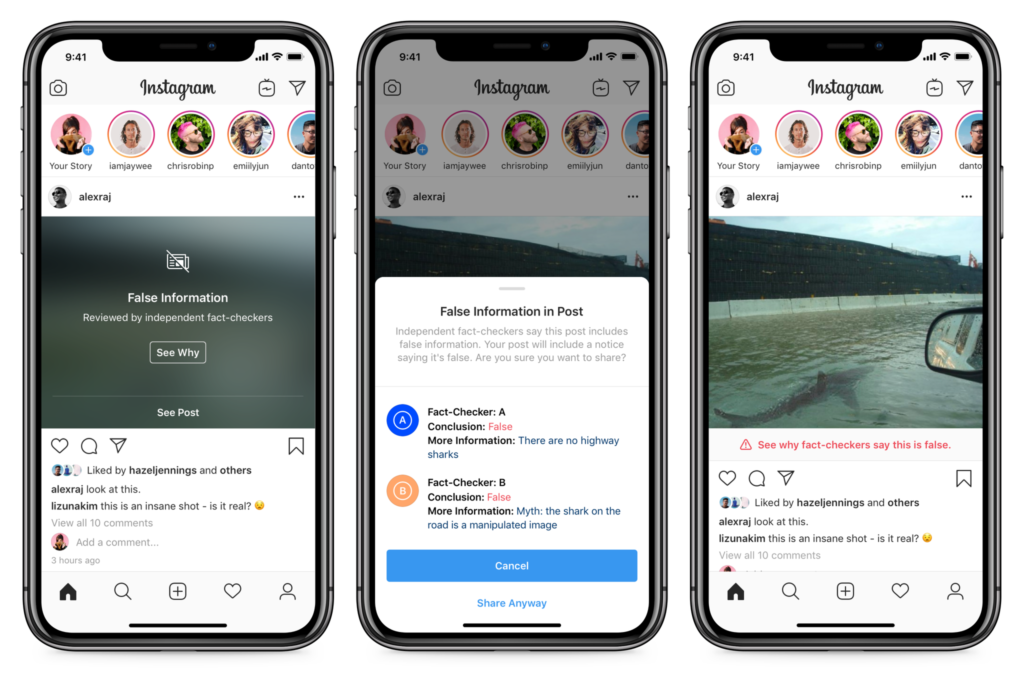

At the end of 2019, Instagram posted about its new policy for flagging potentially misleading images in a post entitled “Combatting Misinformation on Instagram.” Instagram describes the program as follows:

We want you to trust what you see on Instagram. Photo and video based misinformation is increasingly a challenge across our industry, and something our teams have been focused on addressing. […] Today, we’re expanding our fact-checking program globally to allow fact-checking organizations around the world to assess and rate misinformation on our platform.

When content has been rated as false or partly false by a third-party fact-checker, we reduce its distribution by removing it from Explore and hashtag pages. In addition, it will be labeled so people can better decide for themselves what to read, trust, and share.

In a similar vein, Adobe’s Chief Product Officer Scott Belsky has voiced concerns about users’ ability to discern when media has been edited. At Adobe’s 2018 flagship event, this concern was expressed from the keynote stage as:

“We understand that this technology prompts new and important questions about how we can make sure it’s used responsibly, and how we can also use technology to detect when content has in fact been edited. We are always working and talking with partners, media companies, and others about how to address these important issues, and we will continue doing so.”

In October 2019, Adobe returned to the keynote stage with more details about how it intends to do this. At this year’s Adobe MAX, Belsky described what the initiative might look like.

Adobe is pairing up with Twitter and the New York Times to launch an industry-wide content attribution standard across mediums. The high-level plan is to create an opt-in system where creators can use metadata tags to record where an image came from, who created it, and any edits that were applied.

The goals of the project are 1) for creators to receive credit for their work and 2) for consumers to be better able to evaluate the authenticity of the content they see online.
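None of the announcements so far spell out a format, so the following is only a rough sketch of what an opt-in attribution record along these lines could look like. Every name here (`AttributionRecord`, `Edit`, the field names, the tool name) is hypothetical and chosen for illustration; it is not Adobe’s schema or any published standard.

```python
import json
from dataclasses import asdict, dataclass, field


@dataclass
class Edit:
    """One edit applied to the image (hypothetical fields)."""
    tool: str
    action: str


@dataclass
class AttributionRecord:
    """Illustrative stand-in for the metadata tags described above:
    where the image came from, who created it, and what was edited."""
    creator: str
    source: str
    edits: list = field(default_factory=list)

    def add_edit(self, tool: str, action: str) -> None:
        # Append to the edit history rather than overwrite it,
        # so the full chain of changes stays visible.
        self.edits.append(Edit(tool, action))

    def was_edited(self) -> bool:
        return bool(self.edits)

    def to_json(self) -> str:
        # Serialize to JSON so the record could travel with the image.
        return json.dumps(asdict(self), sort_keys=True)


record = AttributionRecord(creator="Example Photographer", source="camera original")
record.add_edit("hypothetical-editor", "color adjustment")
print(record.to_json())
```

Note what a record like this does and does not say: it can tell a viewer that an image was edited and by whom, but not whether the edit was artistic or misleading. That judgment is where the difficulties discussed below come in.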

It’s still early days for both initiatives from Instagram and Adobe, but on its face it seems like their missions should be largely aligned. However, problems are already emerging in how misleading content will be treated under these systems.

The first major sticking point that has emerged amongst these approaches is how to deal with digital art.

Curbing misleading images is good, right?

On the one hand, a prime example of the spread of misleading images emerges with every major hurricane. The edited image of the shark swimming on the highway inevitably pops up across social media, causing undue concern and alarm. This is the example Instagram uses in its post about limiting misleading information.

Image source: Instagram

It seems wise to limit the spread of false and concerning images that cause public alarm or confusion.

But what does this mean for the creators of digital art?

Ce n’est pas de l’art

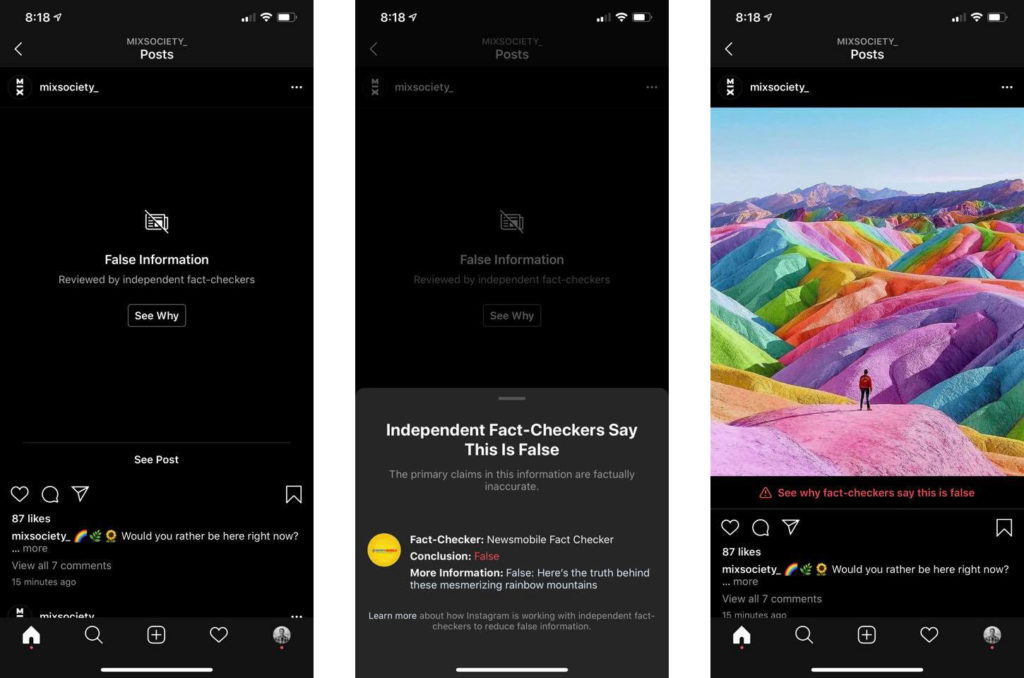

As reported by The Next Web, Instagram has started applying these misinformation standards to artistic images as well:

San Francisco-based photographer Toby Harriman was scrolling through his Instagram feed when he suddenly spotted a post flagged for “False Information,” PetaPixel writes. It was a first for him, so he clicked through the warning pop-up, only to find it was simply a photo of a man standing on a rainbow-colored hill.

Image source: Next Web

From this perspective, the problem of how to deal with edited images seems less clear cut. How to flag artistically edited photos seems especially difficult when paired with another of Adobe’s 2019 keynote announcements: Photoshop Camera.

Photoshop Camera “reimagines smartphone photography” as the world’s “first software programmable camera.” Through Sensei (Adobe’s name for its AI capabilities), users can edit and apply lenses and filters in real time as they take photos. The product is expected to reach general availability in early 2020.

“With Photoshop Camera you can capture, edit, and share stunning photos and moments – both natural and creative – using real-time Photoshop-grade magic right from the viewfinder, leaving you free to focus on storytelling with powerful tools and effects.”

Source: Introducing Adobe Photoshop Camera

The keynote demo featured edits that were more artistic than photorealistic, but it does raise questions about what verifying images will look like in the future. What is art and what is misleading? If artistic photo editing is in question, what does real-time editing mean for the goals of content authenticity and trust?

Digital Art

There are many potential concerns in how we as a society need to approach edited images. The tension between creators and distributors is just one microcosm of them.

The line between creative expression and manipulative images is not clear cut, and as the digital world becomes more entwined with the physical world, it’s going to be increasingly difficult to separate.

We want a world with art. Companies like Adobe focus on creating tools for content producers, and thus are understandably interested in a content authenticity solution that allows for both artistic distribution and authorship tracking.

We also want a world where there is a collective understanding of trustworthiness. Content distributors are working on solutions to ensure their platforms aren’t used to spread misinformation at scale. Companies like Instagram (owned by Facebook) are understandably concerned about making sure their platform reach is used responsibly.

Both approaches will have merits and flaws. Multiple conversations and approaches to content authenticity and trust are necessary and laudable, and we as an industry need to be involved in shaping these discussions and decisions in their various forms.

Disclaimer: Adobe is a RedMonk client and paid for my T&E to MAX.
