TL;DR: NVIDIA have begun rolling out their packaging of key deep learning toolkits, along with an easy abstraction for working on AWS. Life has just got easier for people doing deep learning on AWS.

At GTC last May, NVIDIA announced their GPU Cloud (NGC). At its core was their deep learning stack: a curated set of software, distributed as containers, that they planned to make available to end users of GPUs on various cloud providers. The first of these cloud offerings came out this week, in conjunction with the announcement of NVIDIA Tesla V100 GPU availability on AWS.

Packaging exercises are very important, and as we noted at the time of the original NGC announcement:

> This is a critically important strategic move for NVIDIA. It is a cliché to say software is eating the world, but it is a cliché for a reason. The risk for vendors primarily associated with providing hardware is how easily they can be dropped from any strategic conversations. By packaging up the various components and becoming an essential building block of both the software and hardware toolchains, NVIDIA ensure their place in the conversation going forward. As we like to say at RedMonk, in any software revolution the best packager almost always wins.

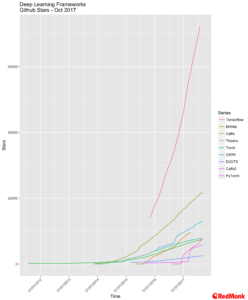

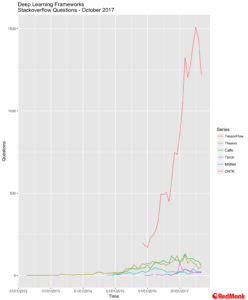

Deep Learning Framework Popularity

We have looked at the popularity of various frameworks in the past. When it comes to deep learning frameworks, NVIDIA have explicitly chosen a combination of the most widely used (e.g. TensorFlow, MXNet), alongside the ones they are directly involved with (Theano, DIGITS).

While we now see TensorFlow as the general entry point for most people getting started with deep learning, we still see a variety of solutions in use. This makes sense: the various frameworks have different strengths and weaknesses, and for an advanced user base, moving between them is not overly difficult. TensorFlow is displacing Theano, but given its heritage this also makes sense.

All that said, TensorFlow has massive momentum behind it, and its current pace of adoption shows no sign of slowing.

The Future?

NVIDIA were clear in their initial announcement that they wanted to support all the major cloud providers. For now, Amazon have an edge with the launch of their new p3 instances, with support for the Tesla V100 GPU. Given the foothold NVIDIA currently have across the cloud providers, providing GPUs to Amazon, Microsoft, Google, Alibaba, IBM and more, we anticipate relatively quick rollouts across the other providers, with NVIDIA providing optimised runtimes.

This widespread availability of both GPUs and an optimised stack raises some interesting questions. Could we see workloads being split across different providers if a general level of abstraction exists? It is very early days for such functionality, but as people work towards cross-cloud portability for certain workload types with technologies such as Kubernetes, could we see the same for deep learning workloads, data gravity notwithstanding?

As for the raw hardware? In terms of general availability NVIDIA have a significant lead in the market. However, we are watching with interest the ongoing developments at Intel under the Nervana brand, particularly their pre-announced NNP chip and the associated developer program. Like NVIDIA, Intel are focusing their efforts on optimisations for several of the leading frameworks such as TensorFlow and Caffe. Early days, but we do anticipate rapid progress from Intel once they ramp up their own program with the cloud providers.

Ultimately, however, packaging will drive adoption.

Disclaimer: NVIDIA, Amazon, Microsoft, IBM and Google are all current RedMonk clients.
